New XOSAN benchmarks

For those following us on Twitter or Facebook, you probably saw this recently:

The new lab is on the right. Here's what's inside:

- 5x Dell T30 (Xeon E3 V5, 32 GiB RAM, 1 TiB disk)

- 1x Ubnt 16-port 10 Gb/s switch for the storage network

We'll benchmark XOSAN with this (decent but cheap) hardware!

XOSAN on cheap HDDs

Each Dell T30 has a Toshiba DT01ACA100:

Check the specs:

- 7200 rpm

- SATA

- around 50€ only!

- no RAID controller and no cache in the Dell T30

Total "raw" capacity across the 5 machines: 5 TiB. But XenServer is installed on each node, which leaves roughly 890 GiB available per host (~4.3 TiB usable in total).

But with local storage, if you lose a host, everything stored on it is lost forever.

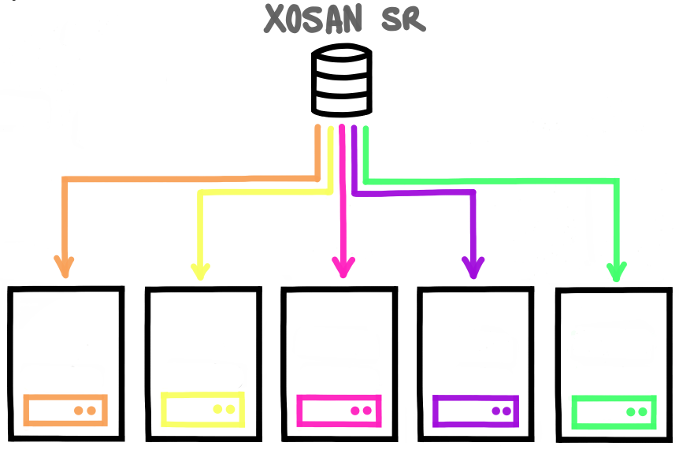

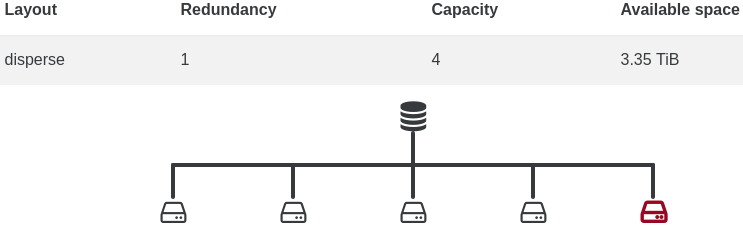

Disperse 5

Creating a XOSAN on these five nodes in disperse mode costs only 20% of the overall capacity, so you'll still enjoy around 3.5 TiB of available space! And if you lose one host, the cluster keeps working.

Here, you can lose 1 disk/node/XS host without losing your data and, in this case, without even a service interruption!
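The capacity figures above can be checked with a bit of arithmetic. This is a minimal sketch assuming equal-size bricks (one per host, ~890 GiB each) and a redundancy of 1 brick out of 5, as in Disperse 5; the function name is ours and is not part of XOSAN.

```python
# Sketch: usable capacity of a dispersed volume, assuming every brick has
# the same size and `redundancy` bricks' worth of space is used for parity.
GIB_PER_TIB = 1024


def disperse_usable_gib(brick_gib: float, bricks: int, redundancy: int) -> float:
    """Usable space when `redundancy` out of `bricks` bricks hold parity data."""
    if not 0 < redundancy < bricks:
        raise ValueError("redundancy must be between 1 and bricks - 1")
    return brick_gib * (bricks - redundancy)


# Figures from this lab: ~890 GiB left per host after the XenServer install.
raw_gib = 890 * 5
usable_gib = disperse_usable_gib(890, bricks=5, redundancy=1)

print(f"raw: {raw_gib / GIB_PER_TIB:.2f} TiB")        # raw: 4.35 TiB
print(f"usable: {usable_gib / GIB_PER_TIB:.2f} TiB")  # usable: 3.48 TiB
```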
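Why can one node disappear without data loss? The sketch below is a simplified illustration only: it uses a single XOR parity fragment (RAID5-like), which is the simplest code that survives the loss of one fragment out of five. XOSAN's disperse mode relies on a more general erasure code, so treat this as a model of the idea, not of the actual implementation; `encode` and `rebuild` are hypothetical helpers.

```python
# Simplified 4 data + 1 parity model of a Disperse 5 volume using XOR parity.
# Not the real erasure code used by XOSAN; just the same principle.
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(block: bytes, data_fragments: int = 4) -> List[bytes]:
    """Split `block` into equal fragments and append one XOR parity fragment."""
    if len(block) % data_fragments:
        raise ValueError("block size must be a multiple of data_fragments")
    size = len(block) // data_fragments
    fragments = [block[i * size:(i + 1) * size] for i in range(data_fragments)]
    return fragments + [reduce(xor_bytes, fragments)]


def rebuild(fragments: List[Optional[bytes]]) -> List[bytes]:
    """Recompute the single missing fragment (marked None) from the others."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) != 1:
        raise ValueError("this sketch can rebuild exactly one missing fragment")
    survivors = [f for f in fragments if f is not None]
    restored = list(fragments)
    restored[missing[0]] = reduce(xor_bytes, survivors)
    return restored


data = b"0123456789ABCDEF"
stored = encode(data)          # one fragment per host
stored[2] = None               # simulate losing the third host
recovered = rebuild(stored)
assert b"".join(recovered[:4]) == data
print("data intact after losing one host")
```

Every write also has to compute and store the parity fragment, which is one reason why writes cost more in disperse mode than plain striping.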

Let's benchmark this!

Benchmarks

I also benchmarked a single local SR used directly. This way, you'll know the performance trade-off of moving to a single converged shared storage!

The benchmarks were run with FIO in a Debian 9 VM, on a 10 GiB file (large enough to avoid caching effects). Throughput is read from the XenServer VDI RRD values, which are pretty accurate and close to "reality". IOPS come directly from FIO. You can find some FIO examples in Sam's blog post.
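For readers who want to run a similar test, here is a hypothetical reconstruction of the mixed random job driven from Python. Only the 10 GiB file size and the 75% read mix come from this article; the block size, queue depth, `libaio` engine and `--direct=1` (bypassing the page cache) are our assumptions, not the exact job used for these results.

```python
# Hypothetical FIO job: random R/W with 75% reads on a 10 GiB file.
# bs, iodepth, ioengine and direct=1 are assumptions, not the article's settings.
import json
import subprocess
from typing import List, Tuple


def fio_randrw_cmd(path: str, size: str = "10G", read_pct: int = 75,
                   bs: str = "4k", iodepth: int = 64) -> List[str]:
    """Build the fio command line for a mixed random read/write job."""
    return [
        "fio",
        "--name=randrw-test",
        f"--filename={path}",
        f"--size={size}",
        "--rw=randrw",
        f"--rwmixread={read_pct}",
        f"--bs={bs}",
        f"--iodepth={iodepth}",
        "--ioengine=libaio",
        "--direct=1",
        "--output-format=json",
    ]


def iops_from_fio_json(raw: str) -> Tuple[float, float]:
    """Return (read IOPS, write IOPS) of the first job in FIO's JSON report."""
    job = json.loads(raw)["jobs"][0]
    return job["read"]["iops"], job["write"]["iops"]


def run_fio(path: str) -> Tuple[float, float]:
    """Run the job (requires fio on PATH) and return (read IOPS, write IOPS)."""
    result = subprocess.run(fio_randrw_cmd(path), capture_output=True,
                            text=True, check=True)
    return iops_from_fio_json(result.stdout)
```

Inside the test VM, something like `run_fio("/root/fio-test.bin")` would return a read/write IOPS pair comparable to the "IOPS" row of the tables, keeping in mind that the numbers depend heavily on the assumed block size and queue depth.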

| | Local SR | XOSAN Disperse 5 |
|---|---|---|
| Sequential reads | 177 MB/s | 214 MB/s |
| Sequential writes | 174 MB/s | 106 MB/s |
| Random IOPS, 75% read (read/write) | 170/50 | 2500/835 |

As expected, write performance is lower. Reads are better, because we can read from multiple disks at the same time.

Huge IOPS boost

But the really interesting part is IOPS: with a mixed random workload of 75% reads and 25% writes (usually a good approximation of "everyday" usage), we got a tremendous boost compared to the local SR: roughly x15 for both reads and writes! That's because IOPS are spread across several devices.

And remember that, as a bonus, you get:

- a shared SR (XenServer HA compatible!)

- you can survive one host going down without any service interruption

- all of this without any extra hardware!

Replicated 2

In this case, you have 2 mirrors combined into one resource (a RAID0 of 2x RAID1, if you prefer, like RAID10).

This leaves roughly 1.7 TiB available, but you can lose up to 2 disks/nodes/XenServer hosts (1 per mirror).

What about performance?
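The "1 per mirror" rule can be made concrete by enumerating failure scenarios. This sketch assumes 4 bricks of ~890 GiB arranged as 2 mirrors of 2 (the RAID0 of 2x RAID1 layout described above, which matches the ~1.7 TiB figure); the host names are hypothetical.

```python
# Sketch: "Replicated 2" as 2 mirrors of 2 bricks (RAID10-like layout).
# Assumption: 4 bricks of ~890 GiB; host names are placeholders.
from itertools import combinations

BRICK_GIB = 890
mirrors = [("host1", "host2"), ("host3", "host4")]

# Each mirror holds two copies of the same data, so it contributes one brick.
usable_gib = BRICK_GIB * len(mirrors)
print(f"usable: {usable_gib / 1024:.2f} TiB")  # usable: 1.74 TiB


def survives(failed: set) -> bool:
    """Data stays available as long as every mirror keeps one live brick."""
    return all(any(h not in failed for h in mirror) for mirror in mirrors)


hosts = [h for mirror in mirrors for h in mirror]
for pair in combinations(hosts, 2):
    print(pair, "OK" if survives(set(pair)) else "mirror lost")
```

Out of the 6 possible two-host failures, the 4 that hit different mirrors are survivable; losing both hosts of the same mirror is not.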

Benchmarks

Same tests as for Disperse, keeping the previous results for comparison:

| | Local SR | XOSAN Disperse 5 | XOSAN Replicate 2 |
|---|---|---|---|
| Sequential reads | 177 MB/s | 214 MB/s | 200 MB/s |
| Sequential writes | 174 MB/s | 106 MB/s | 500 MB/s |
| Random IOPS, 75% read (read/write) | 170/50 | 2500/835 | 425/141 |

Write speed through the roof

In this setup, we still beat the local HDD in terms of IOPS (x2.5 for reads and x2.8 for writes). IOPS are less impressive than with disperse, but sequential writes are ultra high, since writes are spread across 2 mirrors without any complicated parity computation.
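The speedups quoted in this article can be recomputed from the FIO table. The values below are copied from that table (no new measurements); the dictionary keys and the `ratios_vs_local` helper are only for illustration.

```python
# Ratios vs. the local SR, using the FIO results from the table above.
from typing import Dict

RESULTS = {
    "Local SR":          {"seq_read_mbs": 177, "seq_write_mbs": 174,
                          "iops_read": 170, "iops_write": 50},
    "XOSAN Disperse 5":  {"seq_read_mbs": 214, "seq_write_mbs": 106,
                          "iops_read": 2500, "iops_write": 835},
    "XOSAN Replicate 2": {"seq_read_mbs": 200, "seq_write_mbs": 500,
                          "iops_read": 425, "iops_write": 141},
}


def ratios_vs_local(setup: str) -> Dict[str, float]:
    """Divide each metric of `setup` by the same metric on the local SR."""
    base = RESULTS["Local SR"]
    return {k: v / base[k] for k, v in RESULTS[setup].items()}


for setup in ("XOSAN Disperse 5", "XOSAN Replicate 2"):
    print(setup, {k: round(r, 1) for k, r in ratios_vs_local(setup).items()})
```

Disperse 5 lands around x14.7 (read) and x16.7 (write) in IOPS, hence "roughly x15", while Replicate 2 gives x2.5 and x2.8 in IOPS and about x2.9 in sequential writes.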

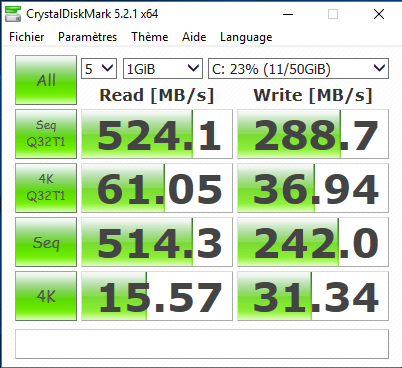

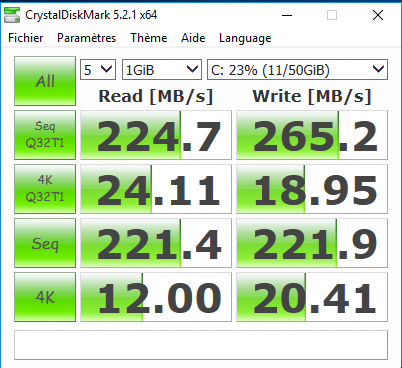

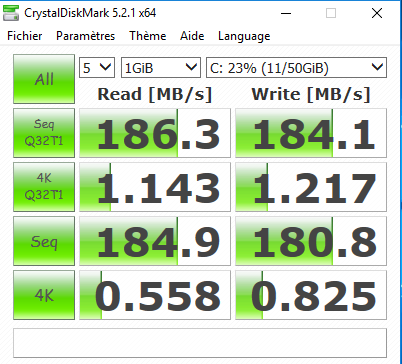

Bonus: Windows benchmarks

Quick benchmarks with CrystalDiskMark. For Replicated 2:

And Disperse 5:

And in comparison, the local storage:

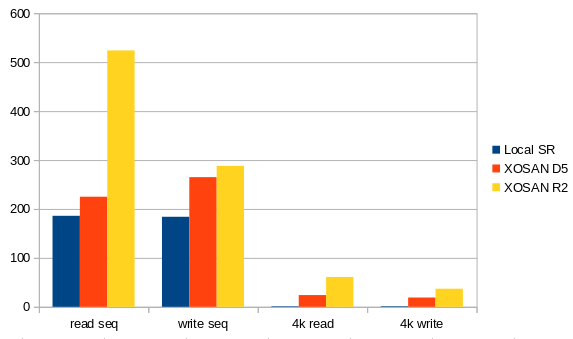

As you can see, local storage is way behind XOSAN. In a graph:

Conclusion

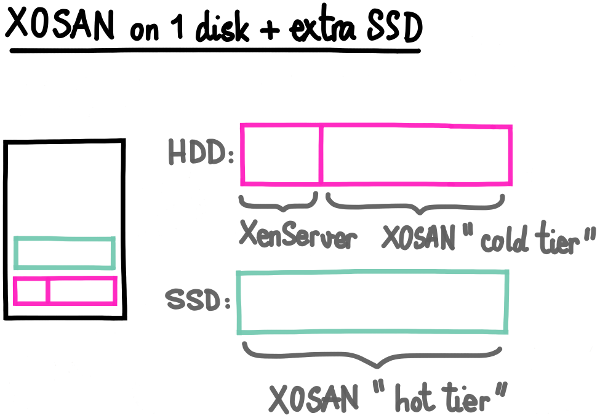

Remember that in this case, we didn't cheat with an SSD cache or anything like that. There are some options to improve sequential workloads, but that's it. Taking the Disperse 5 example, we got a shared SR at the cost of:

- 6% of total RAM (2 GiB per XOSAN VM)

- 20% disk overhead

But on the other hand:

- we improved performance a LOT

- we got a virtual shared SR (XS HA possible)

- live migrating a VM between hosts only requires moving its RAM!

- the shared SR is resilient to one node failure!

- without any extra hardware to purchase!
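The two costs listed above follow directly from the lab's figures. This sketch assumes one 2 GiB XOSAN VM per host (which is what the 6% figure implies with 32 GiB of RAM per host) and 1 parity brick out of 5 for Disperse 5.

```python
# Resource cost of Disperse 5 in this lab.
# Assumption: one XOSAN VM (2 GiB RAM) per host, 32 GiB RAM per host.
HOSTS = 5
RAM_PER_HOST_GIB = 32
XOSAN_VM_RAM_GIB = 2
BRICKS, REDUNDANCY = 5, 1

ram_overhead = (HOSTS * XOSAN_VM_RAM_GIB) / (HOSTS * RAM_PER_HOST_GIB)
disk_overhead = REDUNDANCY / BRICKS  # share of raw space used for parity

print(f"RAM overhead: {ram_overhead:.2%}")    # RAM overhead: 6.25%
print(f"disk overhead: {disk_overhead:.0%}")  # disk overhead: 20%
```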

XOSAN turned your 5 XenServer hosts into a storage solution, without eating up their compute power!

To read all our previous articles on XOSAN, check our dedicated XOSAN tag.