Improving XenServer storage performance with XOSAN

Thanks to XOSAN, you can dramatically improve storage performance on your XenServer hosts, without buying any new hardware and with only a few clicks.

But how exactly?

Cheap hardware with high performance?

Let's do extensive testing with very accessible hardware:

- 5x Dell T30 (Xeon E3 V5, 32 GiB RAM, 1 TiB disk in each)

- HDDs are cheap Toshiba DT01ACA100 ($45!)

- 1x Ubnt 16x 10 Gb/s switch for the storage network

The five Dell T30 hosts used for this article

On the software side, we used:

- XenServer 7.1 as the hypervisor

- A Debian 9 VM in PVHVM mode to run the benchmarks (2 vCPUs, 4 GiB RAM)

- XOSAN in Disperse 5 mode (you can lose 1 node without service interruption)

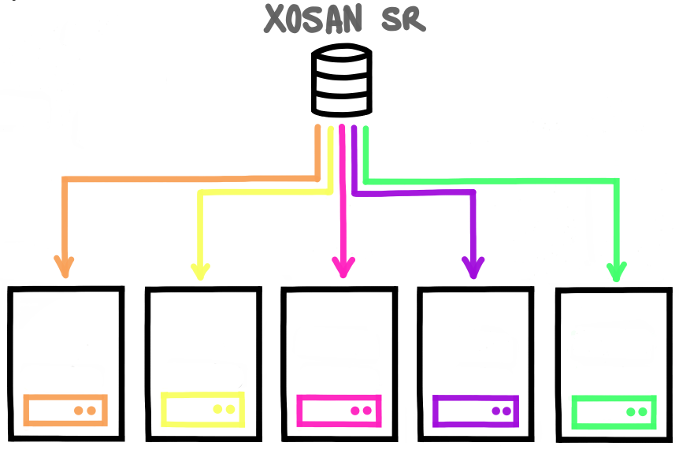

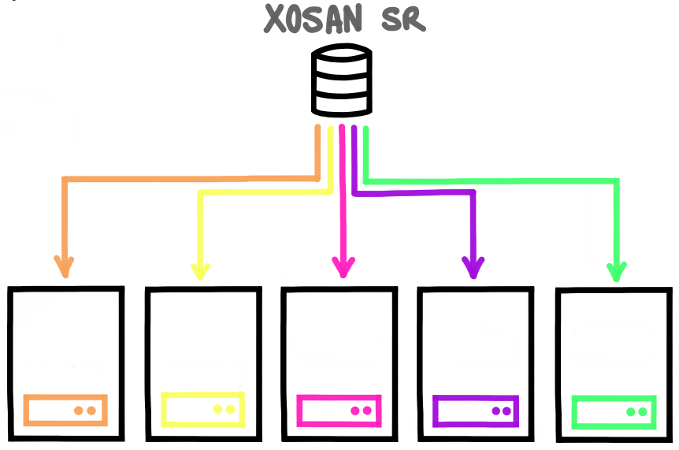

No extra hardware, no external NAS or SAN: just XOSAN, a hyperconverged software solution. Every host is a XenServer hypervisor able to run VMs, and is also a member of the XOSAN storage.

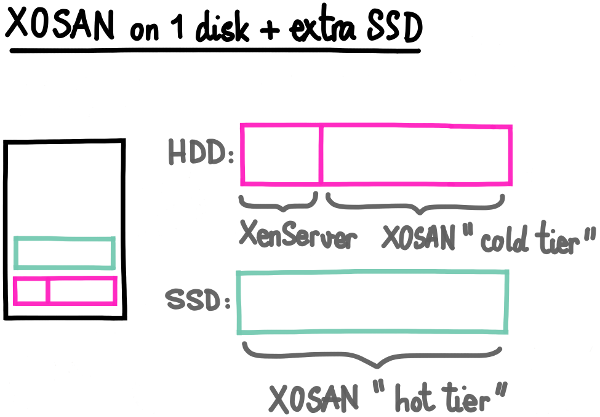

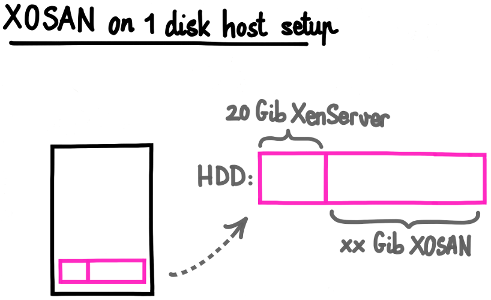

Note: XenServer itself is installed on the HDD, using around 60 GiB of the 1 TiB. The remaining space on the same disk can be used for XOSAN without any problem, so you don't even need to buy new disks!

Flexibility and High Availability

The configuration is Disperse 5: if one host goes down, the storage keeps working (read AND write) without any service interruption. And it still uses 80% of the total disk space (4 TiB available out of the 5x 1 TiB disks)!

It also means that all hosts share the same virtual storage, which allows:

- Live migration (only the RAM is migrated, so it's extremely fast)

- XenServer HA (automatically restarting all VMs that were running on a disconnected or down host)

We could also add hosts in the future to grow our current XenServer and XOSAN setup:

- 6 nodes would allow Disperse 6 with redundancy 2 (tolerating the loss of 2 hosts), with two-thirds (about 67%) of the total disk space available

- 8 nodes would allow Disperse 8 with redundancy 2 (also tolerating the loss of 2 hosts), with 75% of the total disk space available

- and more!
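The capacity figures above follow from simple erasure-coding arithmetic. This minimal Python sketch assumes each node contributes one equally sized brick and that a Disperse n volume with redundancy r keeps n - r bricks' worth of data while tolerating the loss of r nodes; the function names are illustrative only.

```python
from fractions import Fraction

# Assumption: one equally sized brick per node. A disperse-n volume with
# redundancy r stores n - r data fragments plus r redundancy fragments,
# so it survives the loss of r nodes and keeps (n - r) / n of raw space.


def usable_fraction(disperse: int, redundancy: int) -> Fraction:
    """Share of raw disk space available for data, as an exact fraction."""
    if not 0 < redundancy < disperse:
        raise ValueError("redundancy must be between 1 and disperse - 1")
    return Fraction(disperse - redundancy, disperse)


def usable_capacity(disperse: int, redundancy: int, brick_size: float) -> float:
    """Usable space, expressed in the same unit as brick_size."""
    usable_fraction(disperse, redundancy)  # validate the parameters
    return (disperse - redundancy) * brick_size


if __name__ == "__main__":
    # The layouts discussed above, with a 1 TiB brick per node
    for n, r in [(5, 1), (6, 2), (8, 2)]:
        frac = usable_fraction(n, r)
        print(
            f"Disperse {n}, redundancy {r}: tolerates {r} lost node(s), "
            f"{float(frac):.1%} usable, {usable_capacity(n, r, 1.0):g} TiB"
        )
```

For the current Disperse 5 setup this gives 80% and 4 TiB, matching the figures above, while Disperse 6 with redundancy 2 works out to exactly two-thirds of the raw space.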

But now, let's talk about performance!

Local disk vs XOSAN

We want to compare XOSAN performance against the local HDD, obviously using the same VM in both cases.

Do we have to pay a performance penalty to have flexibility and HA? Let's check this!

Quick FIO benchmarks

These are just quick FIO benchmarks on a 10G file: sequential reads and writes, plus the really interesting IOPS benchmark for the usual random load with 75% reads and 25% writes:

| Test | Local disk | XOSAN | Difference |
|---|---|---|---|
| Sequential reads | 165 MB/s | 286 MB/s | +73% |
| Sequential writes | 160 MB/s | 210 MB/s | +31% |
| Random R/W IOPS, 75% read (read/write) | 154/51 | 4100/1400 | +2600% |
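For readers who want to run something similar, here is a minimal Python sketch that assembles fio command lines for the three tests and recomputes the Difference column from the table. The exact FIO options used for these results aren't given, so the block sizes, the `libaio` engine, the queue depth, direct I/O and the `/mnt/bench/fio-test` path are assumptions; only the 10G file size and the 75% read mix come from the text.

```python
"""Sketch: fio invocations approximating the three tests, plus the
arithmetic behind the "Difference" column.

ASSUMPTIONS (not from the article): block sizes, I/O engine, queue depth,
direct I/O and the test file path are illustrative choices.
"""
from __future__ import annotations

import shlex

TEST_FILE = "/mnt/bench/fio-test"  # hypothetical path on the disk under test


def fio_args(name: str, rw: str, bs: str, extra: list[str] | None = None) -> list[str]:
    """Build an argv list for one fio job on a 10G test file."""
    args = [
        "fio",
        f"--name={name}",
        f"--filename={TEST_FILE}",
        "--size=10G",         # the 10G test file from the article
        f"--rw={rw}",
        f"--bs={bs}",         # assumed block size
        "--ioengine=libaio",  # assumed engine (Linux native async I/O)
        "--direct=1",         # bypass the guest page cache
        "--iodepth=64",       # assumed queue depth
    ]
    return args + (extra or [])


JOBS = {
    "seq-read": fio_args("seq-read", "read", "1M"),
    "seq-write": fio_args("seq-write", "write", "1M"),
    # Random access with 75% reads / 25% writes: the IOPS test
    "randrw-75": fio_args("randrw-75", "randrw", "4k", ["--rwmixread=75"]),
}


def percent_gain(local: float, xosan: float) -> float:
    """Relative improvement of XOSAN over the local disk, in percent."""
    return (xosan - local) / local * 100


if __name__ == "__main__":
    for argv in JOBS.values():
        print(shlex.join(argv))
    print(f"seq reads:  +{percent_gain(165, 286):.0f}%")  # +73%
    print(f"seq writes: +{percent_gain(160, 210):.0f}%")  # +31%
    # IOPS row: compare combined read + write IOPS
    print(f"IOPS:       +{percent_gain(154 + 51, 4100 + 1400):.0f}%")
```

Comparing combined read and write IOPS (205 vs. 5500) gives about +2583%, consistent with the rounded +2600% in the table.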

The FIO results are clear: XOSAN is faster. But what about more realistic workloads and applications?

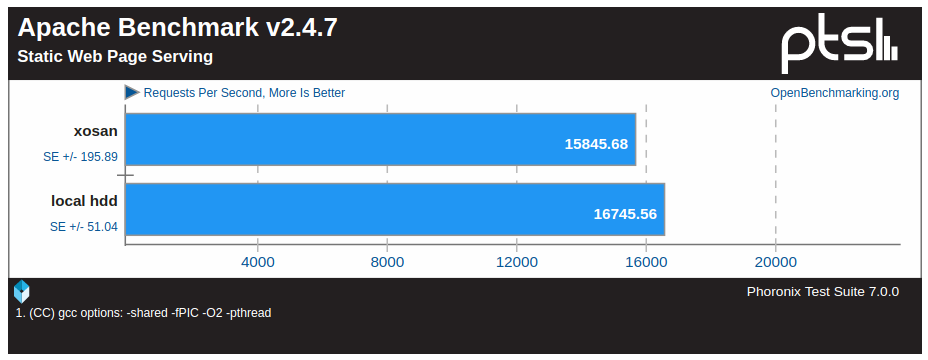

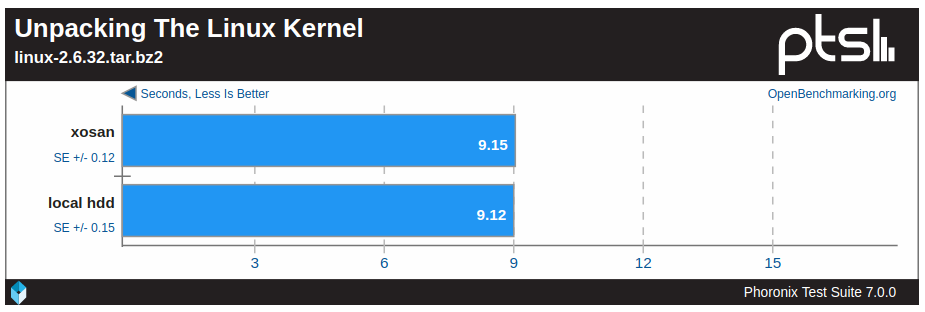

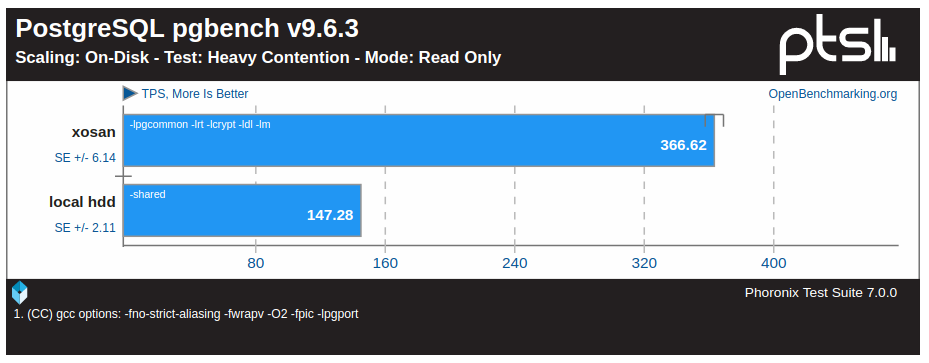

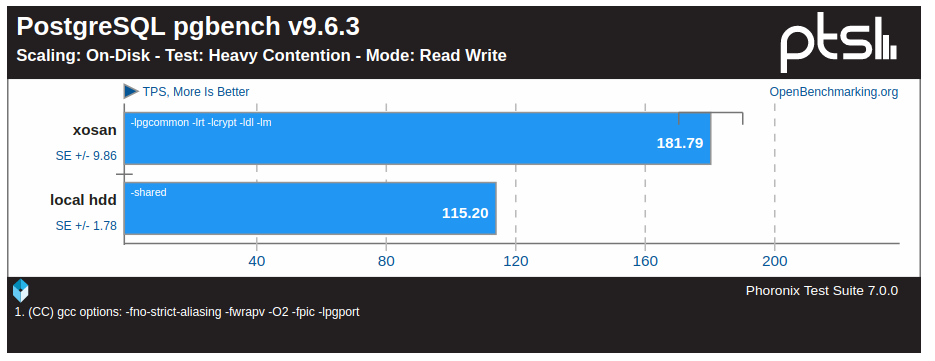

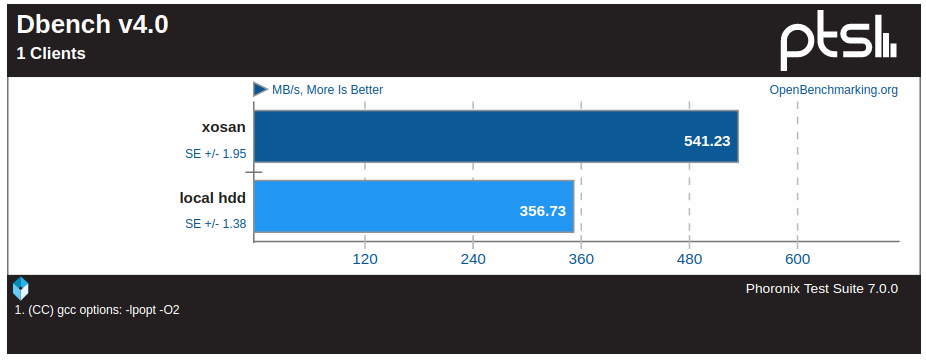

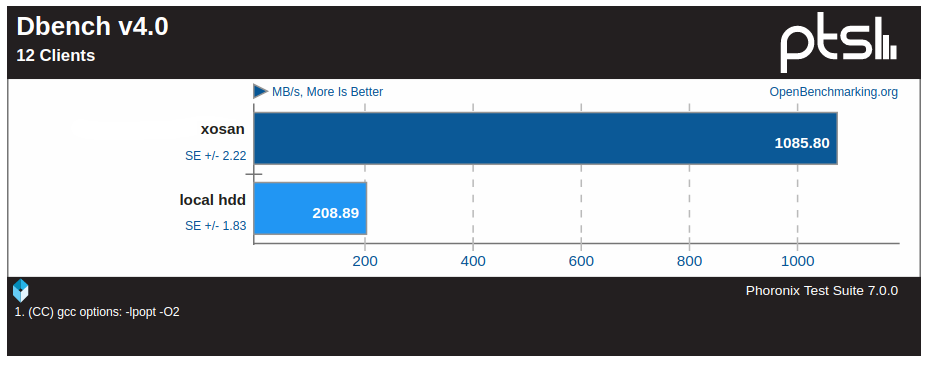

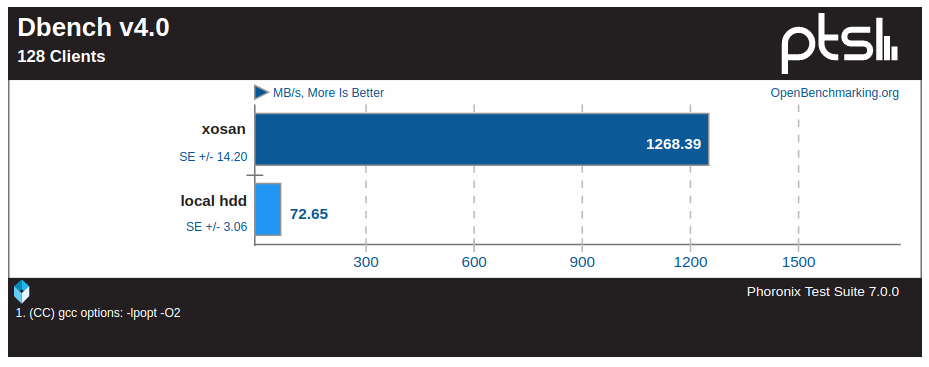

Phoronix Benchmarks

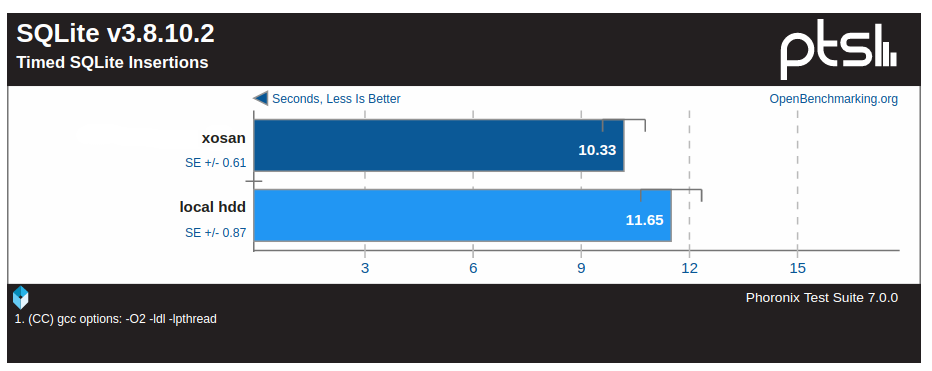

We ran benchmarks with the Phoronix Test Suite, which is perfect for quickly benchmarking various applications. It also helps us learn more about XOSAN's potential trade-offs.

XOSAN is a bit better, but the difference is not huge. Still, if you use SQLite, you'll get a slight boost with XOSAN (12% faster).

A first trade-off? XOSAN is 5% behind the local disk here, maybe because this test works on a lot of small files?

Both systems are on par when unpacking a tarball. This is interesting: despite the large number of small files, there is no performance impact for XOSAN!

With PostgreSQL under "heavy contention", XOSAN outperforms the local disk by a LOT, in both read and write modes.

The more clients you add, the better it gets: XOSAN is 17 times faster than the local disk. I think we can sum up the DBENCH test with this GIF:

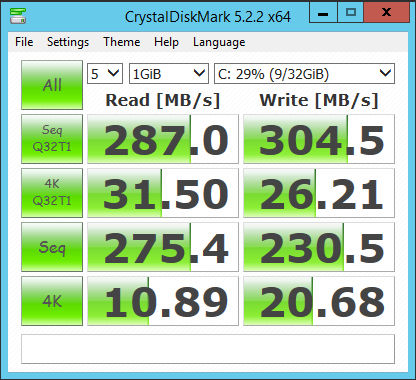

Bonus: Windows Benchmarks

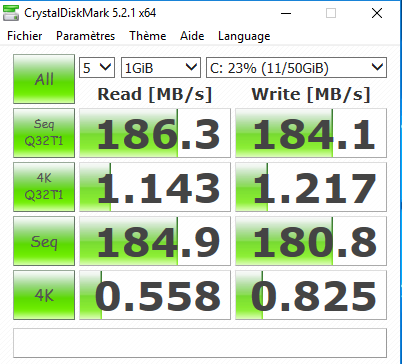

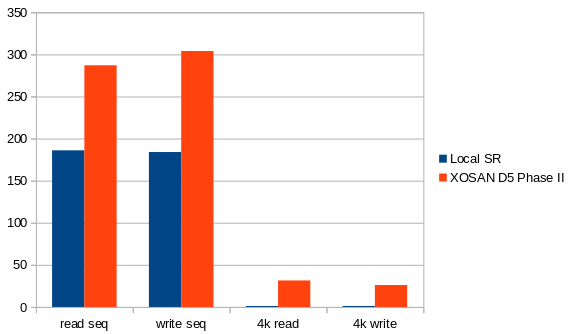

As an extra, we also ran some benchmarks with CrystalDiskMark:

XOSAN:

You can compare it with the local disk itself:

Recap in a chart:

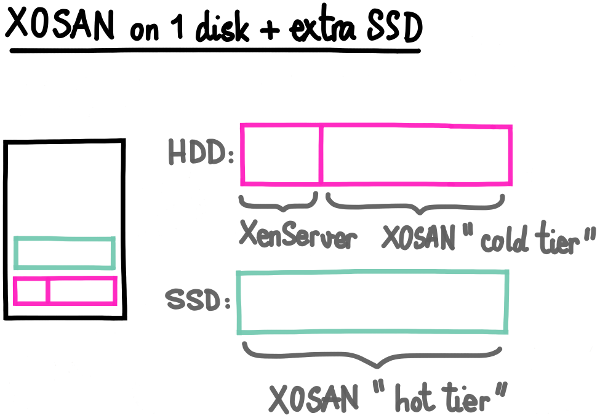

Going even beyond

But that's not all. We also plan to add a new feature in the coming months: tiering. It will let you add "small" SSDs for hot files, in other words, to go even faster than our current benchmarks.
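How XOSAN tiering will work internally isn't described here. As a purely hypothetical illustration of the general "hot files on SSD" idea, the toy Python sketch below counts accesses per file and keeps the most-accessed ones on a small SSD tier. The class name `HotFileTiering`, the slot-based SSD capacity and the count-based promotion policy are all assumptions, not XOSAN's design.

```python
from collections import Counter


class HotFileTiering:
    """Hypothetical toy model, NOT XOSAN's implementation: a small SSD tier
    holds the most frequently accessed files, everything else stays on HDD."""

    def __init__(self, ssd_slots: int) -> None:
        self.ssd_slots = ssd_slots      # how many files fit on the SSD tier (assumed)
        self.hits: Counter = Counter()  # access count per file path
        self.on_ssd: set = set()        # files currently placed on SSD

    def access(self, path: str) -> str:
        """Record one access and report which tier served it."""
        self.hits[path] += 1
        return "ssd" if path in self.on_ssd else "hdd"

    def rebalance(self) -> None:
        """Promote the hottest files to SSD; all other files are demoted to HDD."""
        hottest = self.hits.most_common(self.ssd_slots)
        self.on_ssd = {path for path, _count in hottest}


if __name__ == "__main__":
    tiers = HotFileTiering(ssd_slots=2)
    for path in ["a.img", "a.img", "c.img", "b.img", "a.img", "c.img"]:
        tiers.access(path)  # served from HDD until the first rebalance
    tiers.rebalance()
    print(sorted(tiers.on_ssd))                          # ['a.img', 'c.img']
    print(tiers.access("a.img"), tiers.access("b.img"))  # ssd hdd
```

A real tiering layer would also need to age out old access counts and move data in the background, which this sketch deliberately leaves out.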

Try it now!

XOSAN is already available in Beta phase II, so you can test it on your existing XenServer infrastructure :) You can register for the free beta by following these simple instructions.