Case study #2 - XOA at the Geneva Gaming Convention

Interview with the network coordinator - Romain Pfund

This interview was translated from French.

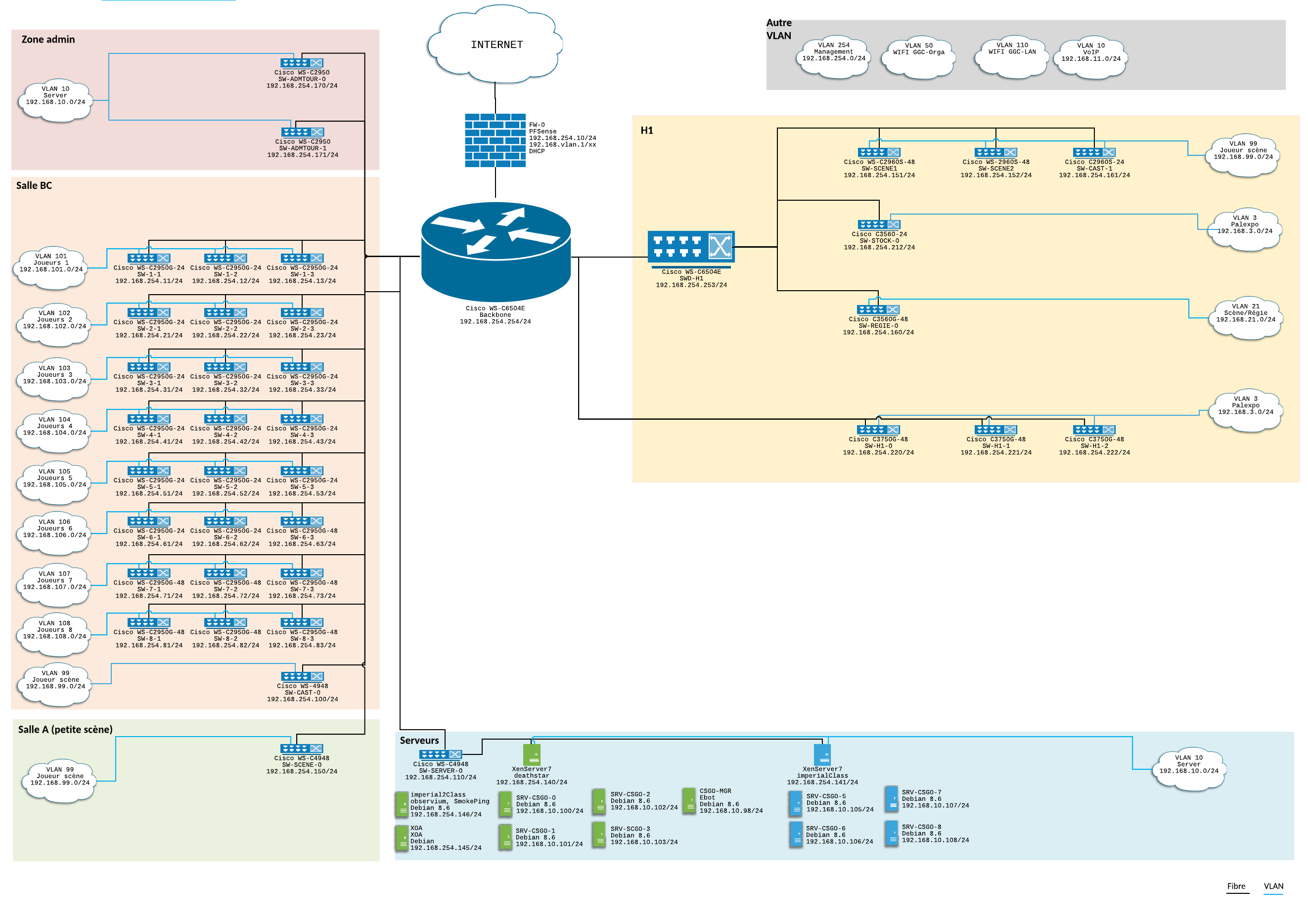

Can you describe the GGC infrastructure for us?

The GGC takes place at Palexpo in Geneva and is split into two parts:

- Hall 1, with the exhibition and the "big stage"

- the Congress Center, with the LAN and the "small stage"

For the network infrastructure, we have 39 Cisco switches, 1 pfSense firewall, 1 BeagleBone Black and 2 physical servers. Most of the hardware is lent by several associations that are part of the collective, but also by some individuals and companies from the Geneva/Vaud region. We are a small team of volunteers, with 6 computer scientists handling preparation, deployment and maintenance during the event.

For the small stage and the LAN, we have 24 switches for players, 2 switches for admins, 1 for the cast, 1 for the stage and 1 for network administration and servers. Everything is connected with optical fiber links.

In Hall 1, we have 3 switches for various exhibitors, connected to the network via the Palexpo infrastructure (QinQ). For the big stage, we have a distribution switch connected by a direct optical fiber to our control center. This switch is in turn connected (still over optical fiber) to 2 switches for the players, 1 for the cast tower, 1 for the control room and 1 for the backstage.
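As a quick cross-check, the per-area counts quoted above can be added up against the overall figure. This is only a tally of the numbers given in the interview; the grouping and the dictionary keys are labels chosen here for illustration, and it assumes the distribution switch is counted among the 39.

```python
# Switch counts as quoted in the interview, grouped by area.
# The key names are illustrative labels, not official designations.
lan_area = {
    "players": 24,
    "admins": 2,
    "cast": 1,
    "stage": 1,
    "network administration and servers": 1,
}
hall_1 = {
    "exhibitors": 3,
    "distribution": 1,
    "players": 2,
    "cast tower": 1,
    "control room": 1,
    "backstage": 1,
}

stated_total = 39  # overall figure quoted in the interview
itemized_total = sum(lan_area.values()) + sum(hall_1.values())
not_itemized = stated_total - itemized_total

print(f"itemized: {itemized_total}, stated: {stated_total}, not itemized: {not_itemized}")
```

The itemized switches account for 38 of the 39; the interview does not say where the remaining one was used.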

For the physical servers, we chose XenServer because it is open source and its features met our expectations. For management, we chose Xen Orchestra. We were won over by its user-friendliness, its ease of deployment, the completeness of the tool and, finally, the fact that it is a solution "close to us". (Note: Xen Orchestra is based in Grenoble, not far from Geneva.)

Our main VMs are:

- XO virtual appliance

- Debian with Observium and SmokePing for network monitoring

- Debian VM for eBot (dedicated to CS:GO match management)

- 8 Debian VMs for CS:GO servers (4 per host)

Finally, the BeagleBone Black routes broadcasts between VLANs (mandatory for some games). We could have virtualized this function, but we chose to keep it on separate hardware as an experiment.
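To illustrate what relaying broadcasts between VLANs involves, here is a minimal sketch of a UDP broadcast relay. It is not the setup used at the GGC: the subnets, the `VLANS` table and the function names are all hypothetical, and a real relay would also need one interface (or sub-interface) in each VLAN.

```python
import ipaddress
import socket

# Hypothetical VLAN subnets; the interview does not give the real addressing plan.
VLANS = {
    "players": ipaddress.ip_network("10.0.10.0/24"),
    "servers": ipaddress.ip_network("10.0.20.0/24"),
    "admins": ipaddress.ip_network("10.0.30.0/24"),
}


def relay_targets(source_ip, vlans):
    """Return the broadcast address of every VLAN except the sender's own."""
    src = ipaddress.ip_address(source_ip)
    return [str(net.broadcast_address) for net in vlans.values() if src not in net]


def relay_forever(port, vlans, own_ips):
    """Re-send UDP broadcasts received on `port` to the other VLANs.

    `own_ips` lists the relay's own addresses so that it ignores the
    packets it has just re-sent (otherwise they would loop forever).
    Note that relayed packets carry the relay's address as their source.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
    sock.bind(("0.0.0.0", port))
    while True:
        payload, (src_ip, _src_port) = sock.recvfrom(65535)
        if src_ip in own_ips:
            continue
        for target in relay_targets(src_ip, vlans):
            sock.sendto(payload, (target, port))
```

For example, `relay_targets("10.0.10.5", VLANS)` selects the broadcast addresses of the servers and admins subnets, which is where a game-discovery packet sent by a player would be forwarded.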

What requirements did you have to meet for this event?

The specifications were to provide all the network infrastructure needed to host:

- a LAN with 500 players maximum (in the end we had 380 players);

- servers for 8 simultaneous CS:GO matches, plus the management of these matches;

- 16 computers for the big stage for the finals (including casters and players);

- the stage systems (e.g. sound via Dante...);

- 12 computers for the small stage;

- 1 Wi-Fi network for the players, 1 Wi-Fi network for the admins;

- 1 public Wi-Fi network for the exhibitors and 1 for the visitors (managed by Palexpo).

We also had some constraints:

- the infrastructure had to be stable around the clock for the whole event;

- players cannot be more than 10 meters away from a switch;

- our cabling must not be visible or get in people's way;

- deliver 10/100/1000 Mbit/s to every stage and to the casters;

- use the Palexpo infrastructure for the Wi-Fi.

Which XO features helped you accomplish your mission?

The first feature that helped us and saved us time was VM import (from VirtualBox). We had many VMs ready (e.g. for network monitoring) - recreating them would have cost us a lot of time. Patching of the physical servers helped us too.

We also used console access a lot to configure networking on the imported or duplicated VMs.

For the configuration of the CS:GO servers, snapshots were very useful. Cloning a VM from a snapshot was also very helpful when duplicating these servers live.

We appreciated how easy it was to declare remotes and to create an ISO library, as well as the backup features.

The ease of use and the responsiveness of XO were much appreciated: we are all volunteers and we prepare the event in our free time.

Are there any features you would like to try next year?

Our servers are second-hand, so I would like to try Disaster Recovery, for some VMs, to a third physical server.

XOSAN also seems interesting (depending on the duration of the event and its growth).

Finally, I would like to use ACLs to create dedicated access for the CS:GO admins so they can manage their VMs independently.