Xen Orchestra 5.76

Xen Orchestra 5.76 has landed. Discover what's new!

Happy Halloween! 🎃 This month, many new features and improvements are on the menu. Is it a monster release? We'll leave it up to you to judge 👻

🚅 Faster backups (preview)

Almost one year ago, we started working on massive improvements to our backup system. First came S3 compatibility with our new Backup Repository (BR) format, which stores 2MiB files instead of one big VHD. Thanks to this, we managed to provide ultra-fast backup merges, native compression, and encryption. And more is coming in the following months: a new tiering system, deduplication, instant restore, immutability and much more (stay tuned!)

There's also another opportunity given by this kind of "2MiB file splitting": fetching blocks in parallel. However, parallel fetching is not possible with the default VHD export capability. So what? Well, we can use something else: NBD.

NBD: Network Block Device

NBD is a network protocol that can be used to expose a block device (i.e. your VM disk) to another machine (your Xen Orchestra appliance). See the Wikipedia page for more details. It's not new, but it's entirely open and documented, and it's also available in XenServer and XCP-ng. It must be enabled manually (see below for a how-to if you want to test the feature).

What's nice with NBD? Well, you can access any block on the device, and read them in parallel if you like. Interesting, isn't it?

Targeting the right blocks

However, seeing the whole drive as blocks is not enough if you want to do a delta backup: you also need to know which blocks changed. That's why we still rely on the VHD format, just to take a look at the Block Allocation Table (BAT) and discover the modified blocks.
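To make the BAT idea more concrete, here is a minimal sketch in Python. It is not the Xen Orchestra code: it only assumes the layout from the public VHD specification, where each BAT entry is a 4-byte big-endian sector offset and 0xFFFFFFFF marks a block with no data. The function name `allocated_blocks` and the sample table are hypothetical.

```python
import struct

UNALLOCATED = 0xFFFFFFFF  # VHD spec: BAT entry for a block that holds no data


def allocated_blocks(bat: bytes) -> list[int]:
    """Return the indices of the blocks that hold data according to the BAT."""
    count = len(bat) // 4
    # Each BAT entry is a 4-byte big-endian sector offset.
    entries = struct.unpack(f">{count}I", bat[: count * 4])
    return [i for i, offset in enumerate(entries) if offset != UNALLOCATED]


# Hypothetical 4-entry table: only blocks 1 and 3 carry data.
sample_bat = struct.pack(">4I", UNALLOCATED, 3, UNALLOCATED, 4103)
print(allocated_blocks(sample_bat))  # [1, 3]
```

On a delta (differencing) VHD, the allocated blocks are the ones written since the parent, so this list is what would need to be fetched.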

In other words, the exposed VHD is only used to find out what changed, and then we use the NBD handler to download the blocks we need. And we do this while fetching data in parallel (downloading up to 16 different blocks at the same time!)
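The parallel part can be sketched like this, still in Python and still as an illustration only. `read_block` is a hypothetical stand-in for a real NBD read (it returns a fake payload here), and the thread pool is just one possible way to cap concurrency at 16; XO itself may do this differently.

```python
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 2 * 1024 * 1024  # 2MiB blocks, as described above
MAX_PARALLEL = 16  # up to 16 blocks in flight at the same time


def read_block(index: int) -> bytes:
    """Hypothetical stand-in for an NBD read at offset index * BLOCK_SIZE."""
    return index.to_bytes(4, "big")  # fake payload, for illustration only


def fetch_blocks(indices, reader=read_block, workers=MAX_PARALLEL):
    """Fetch the given blocks with at most `workers` reads running at once."""
    indices = list(indices)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() runs the reads concurrently and yields results in input order.
        return dict(zip(indices, pool.map(reader, indices)))


blocks = fetch_blocks([1, 3, 7])
print(sorted(blocks))  # [1, 3, 7]
```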

Immediate benefits

Our initial tests are showing multiple benefits. First, the max backup speed is higher. Indeed, parallelism is providing a nice boost on transfer! We are also seeing a reduced load on the Dom0 while doing the export (vs the usual VHD method). Obviously, this depends a lot on your setup, and we'll provide more benchmarks in the future.

Other opportunities and next steps

The other perk with NBD download is the ability to read a block device the way you want (at any position). This means that we could provide a way to resume interrupted backups! This is a big deal when you have a failure on a large transfer.
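As a rough idea of what resuming could look like (this is not an existing XO feature, and every name below is hypothetical): since any block can be read at any position, a resumed job would only need the list of blocks that are not stored yet, plus their byte offsets on the device.

```python
BLOCK_SIZE = 2 * 1024 * 1024  # 2MiB blocks


def remaining_blocks(wanted, already_stored):
    """Blocks still to download after an interrupted transfer, in order."""
    done = set(already_stored)
    return [index for index in wanted if index not in done]


def block_offset(index: int) -> int:
    """Byte position of a block on the exposed device."""
    return index * BLOCK_SIZE


# Hypothetical run: 5 blocks wanted, transfer failed after blocks 1 and 3.
todo = remaining_blocks([1, 3, 7, 8, 12], already_stored=[1, 3])
print(todo)  # [7, 8, 12]
print([block_offset(i) for i in todo])  # [14680064, 16777216, 25165824]
```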

In the future, we'd like to add other improvements:

- Displaying in the UI whether your backup is done using this NBD option or not

- Making the block size configurable: it's 2MiB today, but early benchmarks show improved speed with smaller blocks, depending on various factors. Being able to configure the block size will let you find the sweet spot for your very own use case.

How to test it

So, you want to take a look yourself? You need to enable NBD on your pool first (in fact, on a pool network). You can read this documentation for more details, but in short:

xe network-param-add param-name=purpose param-key=nbd uuid=<network-uuid>

This will enable NBD on your targeted network, where XO is pulling the backups. XO is using TLS by default, so the transfer is secure. In case you want to benchmark the difference, you can also disable TLS with "insecure NBD", but it's obviously not recommended for production usage.

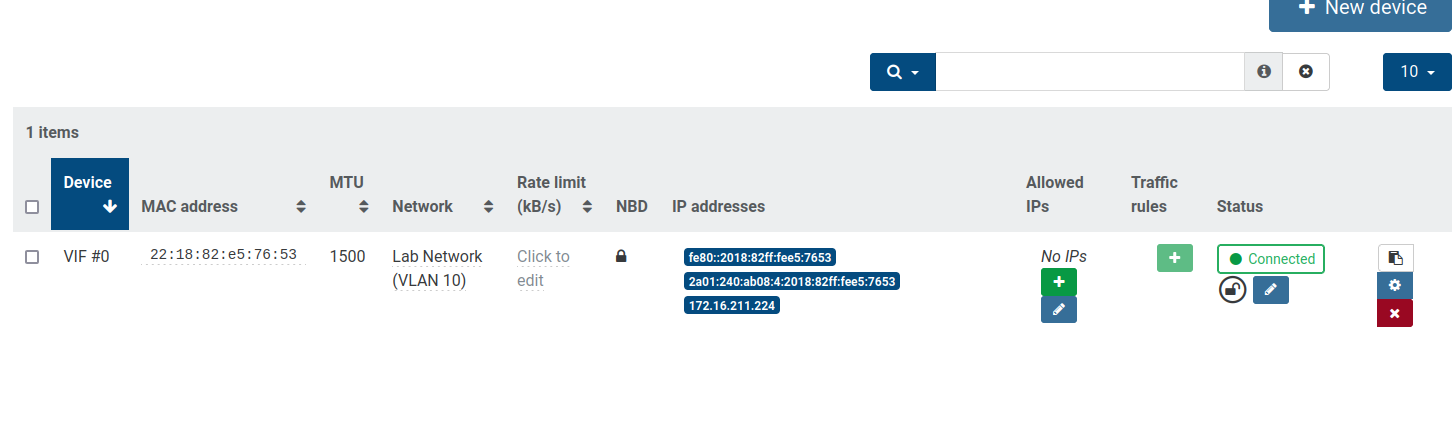

All your virtual interfaces on this network will be visible with a "lock" in the new "NBD" row, e.g. from a VM network view:

Now you can edit your XO config.toml file (near the end of it) with:

[backups]

useNbd = true

And don't forget to restart xo-server after that! Remember that it works ONLY with a remote (Backup Repository) configured with "multiple data blocks".

That's it, you are ready to test it!

☁️ Cloud config drive removal after VM creation

When using a cloud-init config, we create a small drive that contains the configuration you defined in the VM creation screen. For most users, this disk is no longer useful once the configuration has been applied and the VM has booted for the first time.

So we simply added an option allowing the user to remove that disk automatically after the VM creation.

🔐 Improved backup encryption

After our initial release of backup encryption, we replaced the original encryption algorithm, AES-256-CBC, with AES-256-GCM. This was needed to avoid a potential padding oracle attack, targeting the "CBC" part. You can learn more in the Padding Oracle attack article on Wikipedia.

Note that AES-256-GCM is, of course, not affected by this kind of attack.

Security process

Through this example, we wanted to remind you we have a security policy in place so anyone can make a report. All requests are investigated very seriously. We also have a similar process for XCP-ng, see https://xcp-ng.org/docs/security.html

↔️ Evolution of our cache system for VM restore

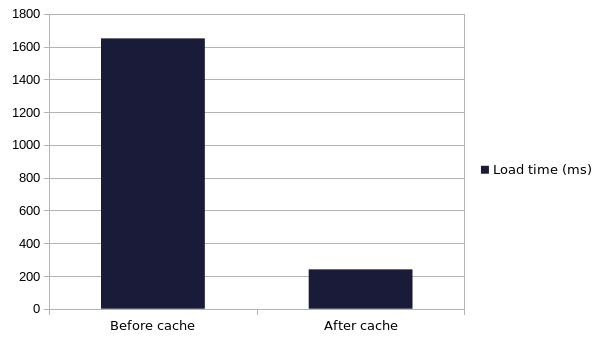

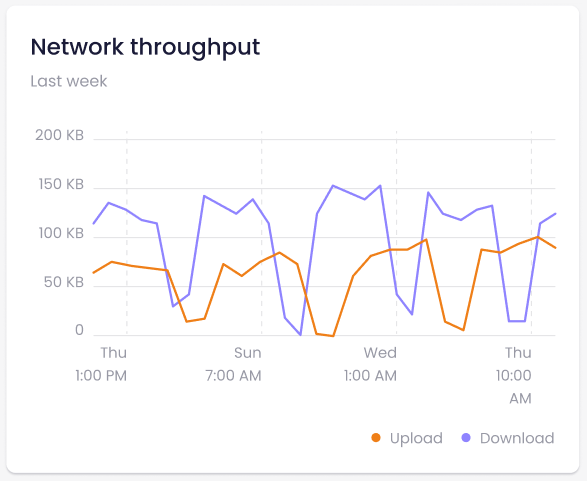

We improved our system to fetch existing VM backup information, in order to display everything fast inside your Xen Orchestra web UI. And it's a lot faster, see for yourself:

You can read more here:

🔭 XO Lite

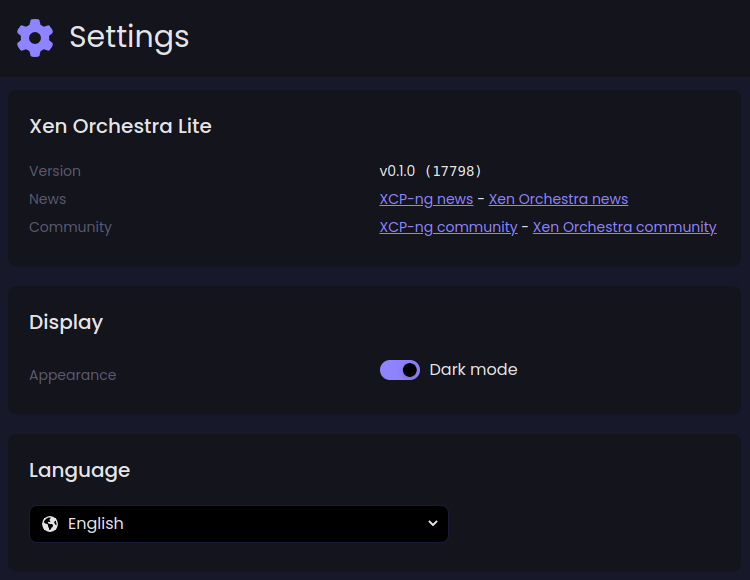

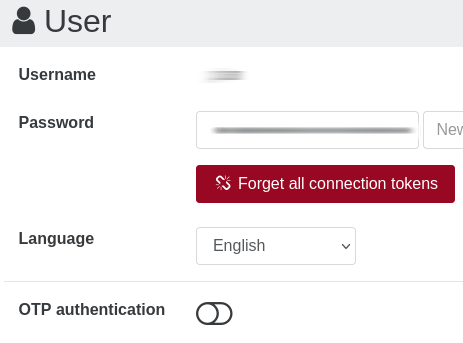

This month, and as promised, we delivered some new components and views. First, you now have a dedicated "settings page" with language selection, dark/light mode selection and various information:

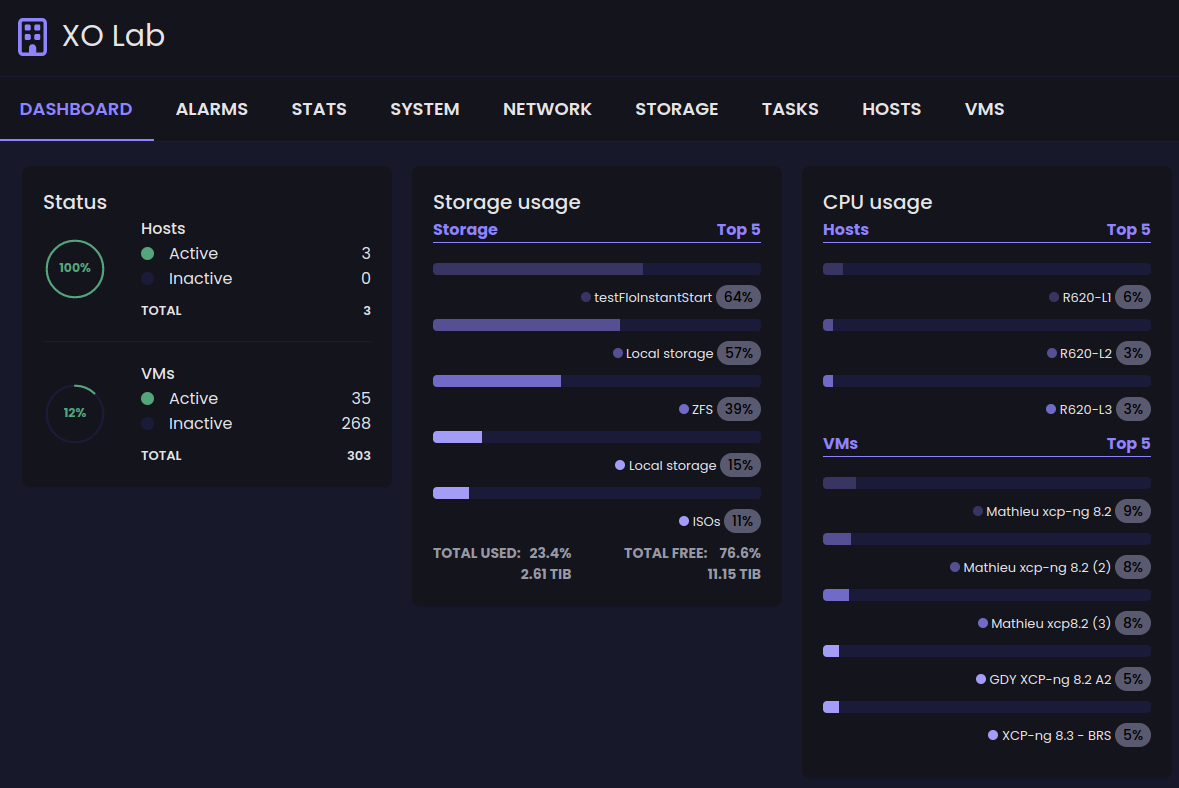

We are also making progress on the Dashboard page for your pool, adding CPU usage info for your hosts and your VMs:

Coming soon

The next steps on XO Lite are:

- Providing actions for all your VMs (start, shutdown, reboot, snapshot…)

- Adding resource graphs for CPU, memory and so on. Our components are almost done!

🎫 XCP-ng support license binding

Until now, it wasn't convenient to know which hosts were being protected by our XCP-ng Pro Support. That's why we decided to allow our customers to assign their existing (or future) XCP-ng support licenses to the host/pool of their choice, directly from the Xen Orchestra web UI.

Then, to make it easier, you can assign support to an entire pool automatically:

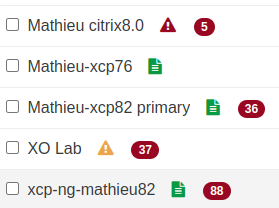

You can see here, with a green "file icon", pools with a valid pro support contract:

It's also possible to move a license from one host to another (in case of machine replacement for example), in just a few clicks. The whole system was meant to help you to track your supported hosts, not to be in your way. This is also a good opportunity to remind you about our vision on our pro support:

🕒 XOA Check: adding NTP sync

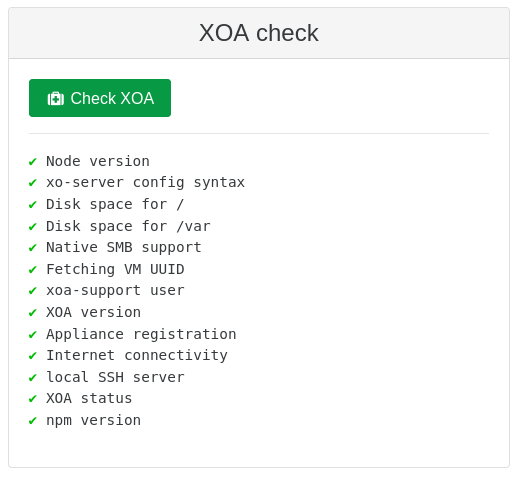

"XOA Check" is a feature available inside our turkey Xen Orchestra appliance. We already cover various checks to be sure your XOA is running in an optimal fashion, but sometimes we add another verification when we discover a problem.

In this case, it was an issue related to our Time-based One Time Password (TOTP) feature in Xen Orchestra: for our customer, it simply didn't work. In fact, it wasn't XOA's fault, but it was a very subtle problem that was hard to track down.

We then realized that the XOA NTP client was enabled, but unable to reach any public NTP servers (and no other NTP server was configured). Since TOTP is a time-sensitive algorithm, this created the issue. That's why we decided to consider NTP important enough to be checked in our "checklist".
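To see why a drifting clock breaks TOTP, here is a small Python sketch of the standard algorithm (RFC 6238, built on the HOTP truncation from RFC 4226). It is an illustration and not the XO implementation; the secret and timestamps are the public RFC test values.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute a TOTP code (HMAC-SHA1) for the given Unix time."""
    counter = unix_time // step  # the code only depends on the 30 s time window
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


secret = b"12345678901234567890"  # test secret from RFC 6238
print(totp(secret, 59, digits=8))  # 94287082 (RFC 6238 test vector)

# A server whose clock is 2 minutes off computes a different code:
print(totp(secret, 59), totp(secret, 59 + 120))  # 287082 254676
```

Both sides must agree on the current time window, which is why an appliance that cannot reach any NTP server will eventually reject valid codes.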

🗞️ XO logs to external syslog

Since this release, all your XO logs can be sent to an external syslog server! This is very useful when you want to centralize all your logs in one place, so you can easily analyze them without having to connect to all your machines one by one. It also makes it easier to find common patterns and correlations between events, which helps a lot when debugging issues. It even helps with intrusions: since the logs are sent somewhere else, they can be removed locally but they will stay on your destination machine!

In order to enable it, you just have to add this to your configuration file:

[logs.transport.syslog]

target = 'tcp://syslog.example.org:514'

In short, all the logs you can see from journalctl -u xo-server will be forwarded to your central syslog server. This will work with any syslog server, like Rsyslog, Splunk, Logstash, Graylog… You name it!
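If you are curious about what travels on the wire, here is a simplified Python sketch. It is not how xo-server builds its messages: it only splits a target URL like the one above, and computes the standard syslog priority value (facility * 8 + severity). The line format is reduced on purpose (a real syslog message also carries a timestamp and a hostname), and the function names are hypothetical.

```python
from urllib.parse import urlsplit


def parse_target(target: str):
    """Split a target URL into (protocol, host, port)."""
    parts = urlsplit(target)
    return parts.scheme, parts.hostname, parts.port


def syslog_line(facility: int, severity: int, tag: str, message: str) -> str:
    """Build a reduced syslog line: <PRI>tag: message."""
    # PRI = facility * 8 + severity, as defined by the syslog RFCs.
    return f"<{facility * 8 + severity}>{tag}: {message}"


print(parse_target("tcp://syslog.example.org:514"))
# ('tcp', 'syslog.example.org', 514)

# Facility 1 (user-level), severity 6 (informational):
print(syslog_line(1, 6, "xo-server", "backup finished"))
# <14>xo-server: backup finished
```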