Xen Orchestra 5.57

With Xen Orchestra 5.57, we continue the work started with version 5.56 on improving the backup features of the appliance. We are also starting to work on our Load Balancing plugin to make it more like a Resource Scheduler.

Also, the beta of our upcoming product, XOSTOR, is coming soon.

Note: the release is still in progress as we write these lines. Expect it to be available later tonight!

XOSTOR Beta

XOSTOR is the virtual SAN and software hyperconvergence solution we are working on in partnership with LINBIT, the specialists in DRBD technology.

The beta will start very soon. If you are interested in testing the solution, register your email on our waiting list on this page.

Backup improvements

Xen Orchestra 5.57 is the second wave of our backup improvements. The major change this month is the introduction of backup workers in xo-server:

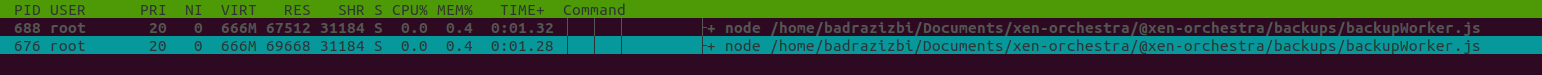

Backup workers in xo-server

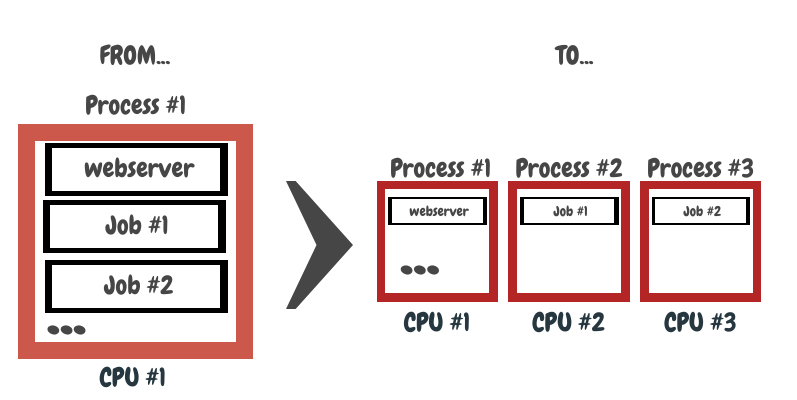

One job - one process.

Currently, a whole backup job runs in a single large process. The bigger the job, the heavier the process. Consequently, if one part of the backup job fails, it often brings down the whole process. A very large process can also cause performance issues, and a single process cannot take advantage of multiple CPU cores.

Backup workers in Xen Orchestra will allow us to run each backup job in its own process. This should bring multiple benefits:

- XOA should be more responsive when large backup jobs are running.

- A crash in one part of the job won't stop the whole backup job, only the faulty process.

- Backup performance will be less CPU-limited: since the work is spread across multiple processes, adding more vCPUs to XOA should give you faster backups.
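To illustrate the "one job, one process" idea, here is a minimal sketch using Node.js `child_process.spawn`. It is not the actual xo-server worker code: the `BackupJob` shape and the inline `script` standing in for real backup logic are hypothetical. It shows the two properties described above: jobs run in separate processes that the OS can schedule on different cores, and a crashing job only fails its own process.

```typescript
import { spawn } from "node:child_process";

// Hypothetical job description; xo-server's real job objects look different.
interface BackupJob {
  id: string;
  // Inline JavaScript standing in for the real backup logic (illustration only).
  script: string;
}

interface JobResult {
  id: string;
  status: "success" | "failure";
  exitCode: number | null;
}

// Runs one job in its own Node.js process and reports how that process ended.
function runJobInWorker(job: BackupJob): Promise<JobResult> {
  return new Promise<JobResult>((resolve) => {
    const child = spawn(process.execPath, ["-e", job.script], { stdio: "ignore" });
    // "error" fires if the process could not be started at all.
    child.on("error", () => resolve({ id: job.id, status: "failure", exitCode: null }));
    // "exit" fires when the process ends, whether it succeeded or crashed.
    child.on("exit", (code) =>
      resolve({ id: job.id, status: code === 0 ? "success" : "failure", exitCode: code }),
    );
  });
}

// Starts every job at once: the OS can schedule the processes on different cores,
// and a crash in one process does not affect the others or the parent.
function runJobs(jobs: BackupJob[]): Promise<JobResult[]> {
  return Promise.all(jobs.map(runJobInWorker));
}
```

With three jobs where the second one throws, the parent still collects a result for every job: the crashing one is reported as a failure with exit code 1, and the other two succeed.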

Better handling of disabled/unreachable targets

When a backup job is launched with an unreachable or disabled target (SR or remote), the job will no longer fail and be cancelled. Instead, it will continue with all available targets.

At the end of the job, you will get a failure status for each task that couldn't be performed because its target was unreachable or disabled.
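The behavior above boils down to "one task per target, and a failing target is recorded instead of aborting the job". A minimal sketch follows; the `Target` fields and the `exportTo` stand-in are hypothetical simplifications, not Xen Orchestra's real data model, and targets are processed sequentially here only to keep the example short.

```typescript
// Hypothetical target model; real SRs and remotes carry much more state.
interface Target {
  name: string;
  kind: "SR" | "remote";
  enabled: boolean;
  reachable: boolean;
}

interface TaskResult {
  target: string;
  status: "success" | "failure";
  reason?: string;
}

// Stand-in for the real export: it only checks the target's state here.
async function exportTo(target: Target): Promise<void> {
  if (!target.enabled) throw new Error("target is disabled");
  if (!target.reachable) throw new Error("target is unreachable");
  // ...the actual data transfer would happen here...
}

// One task per target: a failing target is recorded, not fatal to the job.
async function runBackupJob(targets: Target[]): Promise<TaskResult[]> {
  const results: TaskResult[] = [];
  for (const target of targets) {
    try {
      await exportTo(target);
      results.push({ target: target.name, status: "success" });
    } catch (error) {
      const reason = error instanceof Error ? error.message : String(error);
      results.push({ target: target.name, status: "failure", reason });
    }
  }
  return results;
}
```

The job always returns one result per target, so the final report can list exactly which tasks failed and why.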

Automatically clean orphan objects from VMs

When providing support on some users' infrastructure, our team often used a script to remove all orphaned objects left behind by a backup job that had previously failed or been cancelled (e.g. a snapshot left unused because the job failed before the export).

We have now integrated a variation of this script into our backup code in order to clean these unnecessary objects from VMs automatically.
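As a rough illustration of what "orphaned" can mean here, the sketch below flags snapshots that were created by a backup job whose run has ended without the snapshot being exported. The metadata fields (`createdByJob`, `runFinished`, `exported`) are hypothetical; Xen Orchestra tracks this information differently. Snapshots not created by a backup job (e.g. manual ones) are never selected.

```typescript
// Hypothetical snapshot metadata, for illustration only.
interface Snapshot {
  uuid: string;
  createdByJob?: string; // id of the backup job that created it, if any
  runFinished: boolean; // the run that created it is no longer active
  exported: boolean; // the snapshot reached at least one target
}

// A snapshot is considered orphaned when a backup job created it, that job's
// run has ended (failed or cancelled), and the snapshot was never exported.
function findOrphanSnapshots(snapshots: Snapshot[]): string[] {
  return snapshots
    .filter((s) => s.createdByJob !== undefined && s.runFinished && !s.exported)
    .map((s) => s.uuid);
}
```

Snapshots belonging to a run that is still in progress are skipped, so cleanup cannot race with an ongoing export.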

Easy setup for another network interface

Some of you are using multiple network interfaces, usually one for the SAN or NAS connection and one for the appliance. Until now, configuring multiple network interfaces in Xen Orchestra required editing the system's interfaces file and, therefore, some knowledge of Linux configuration.

To make this easier, we have added a simple script to set up an additional interface.

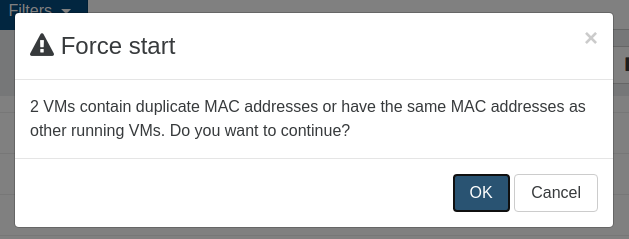

Avoid booting VMs with duplicated MAC address

To avoid issues in your infrastructure, you will now get an alert when you try to boot a VM using a MAC address that is already in use in your infrastructure.

You can still force the boot of the VM if you want to, at your own risk.
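The check can be sketched as follows. The `Vm` shape is a hypothetical simplification, and the sketch assumes that "in use" means attached to another running VM; Xen Orchestra's actual rule may differ. MAC addresses are compared case-insensitively, and a `force` flag mirrors the option to boot anyway.

```typescript
// Hypothetical, simplified model of a VM and its network interfaces (VIFs).
interface Vm {
  uuid: string;
  running: boolean;
  macs: string[]; // MAC addresses of the VM's VIFs
}

// MAC addresses may differ only in case ("AA:BB:..." vs "aa:bb:..."), so normalize.
const normalizeMac = (mac: string): string => mac.trim().toLowerCase();

// Returns the MACs of `vm` that are already used by another running VM.
function findDuplicateMacs(vm: Vm, allVms: Vm[]): string[] {
  const inUse = new Set<string>();
  for (const other of allVms) {
    if (other.uuid === vm.uuid || !other.running) continue;
    for (const mac of other.macs) inUse.add(normalizeMac(mac));
  }
  return vm.macs.map(normalizeMac).filter((mac) => inUse.has(mac));
}

// Blocks the boot when duplicates exist, unless the user explicitly forces it.
function canBoot(vm: Vm, allVms: Vm[], force = false): { ok: boolean; duplicates: string[] } {
  const duplicates = findDuplicateMacs(vm, allVms);
  return { ok: force || duplicates.length === 0, duplicates };
}
```

Returning the list of duplicates, rather than a bare boolean, lets the alert tell the user which address is the problem.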

Anti-affinity in Load Balancer

The Load Balancer in Xen Orchestra is becoming more and more of a Resource Scheduler. As a consequence, the plugin will likely be renamed XORS in one of our future releases.

Anti-affinity in the Load Balancer lets you prevent VMs sharing a chosen tag from running on the same host. This way, you avoid placing pairs of redundant VMs (or similar) on the same host.

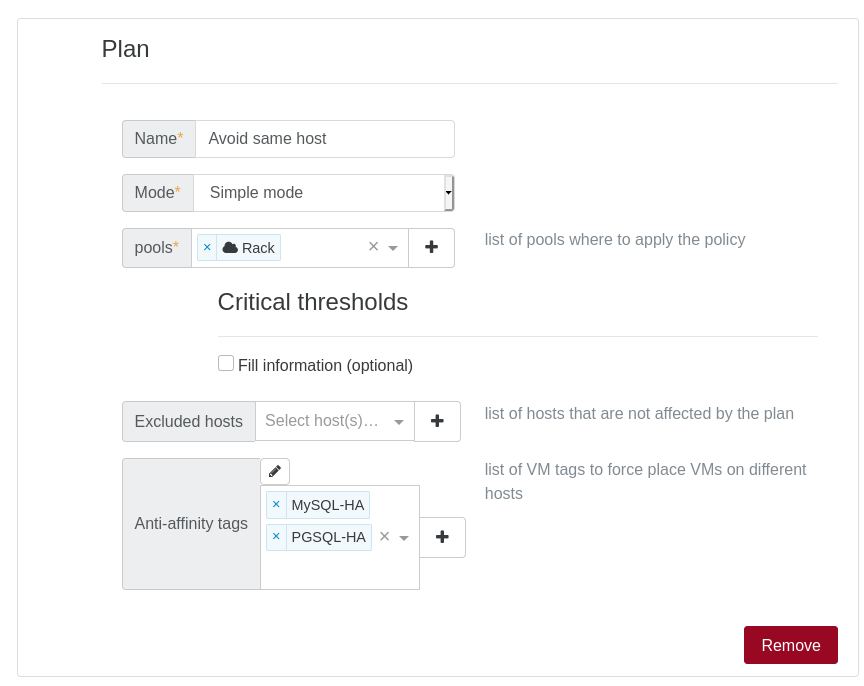

Let's see a simple example: you have multiple VMs running MySQL and PostgreSQL with high availability/replication. Obviously, you don't want replicated database VMs running on the same physical host, where a single host failure would take down every replica. Just create your plan like this:

- Simple plan: no active load balancing mechanism here.

- Anti-affinity: we added our two tags, meaning that, as far as possible, a VM with one of these tags will never run on the same host as another VM with the same tag.
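A minimal sketch of an anti-affinity placement rule, for illustration only (it is not the plugin's actual algorithm): when placing a VM, prefer the host where the fewest VMs share one of its anti-affinity tags. "As far as possible" is modeled by accepting a conflict when every host already has one. The `PlacedVm` shape and the tag names in the usage note are hypothetical.

```typescript
// Hypothetical, simplified view of where VMs currently run.
interface PlacedVm {
  name: string;
  host: string;
  tags: string[];
}

// Number of VMs on `host` that share an anti-affinity tag with the new VM.
function countConflicts(
  host: string,
  tags: string[],
  placed: PlacedVm[],
  antiAffinityTags: Set<string>,
): number {
  const watched = tags.filter((tag) => antiAffinityTags.has(tag));
  return placed.filter(
    (vm) => vm.host === host && vm.tags.some((tag) => watched.includes(tag)),
  ).length;
}

// Picks the host with the fewest conflicts. When every host already has a VM
// with the same tag, a conflict is accepted; ties keep the order of `hosts`.
function chooseHost(
  tags: string[],
  hosts: string[],
  placed: PlacedVm[],
  antiAffinityTags: Set<string>,
): string {
  if (hosts.length === 0) throw new Error("no host available");
  let best = hosts[0];
  let bestCount = countConflicts(best, tags, placed, antiAffinityTags);
  for (const host of hosts.slice(1)) {
    const count = countConflicts(host, tags, placed, antiAffinityTags);
    if (count < bestCount) {
      best = host;
      bestCount = count;
    }
  }
  return best;
}
```

For instance, with anti-affinity tags `mysql` and `postgresql`, a MySQL VM already on `host1` and a PostgreSQL VM on `host2`, a new `mysql`-tagged VM is placed on `host2`, while a VM with no anti-affinity tag simply goes to the first host.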