DevBlog #2 - XO SDN controller

We are working on a new XO plugin: an SDN controller that makes it possible to create pool-wide private networks.

What is an SDN controller?

An SDN controller (Software-Defined Networking controller) is a tool for managing software network bridges (e.g. Open vSwitch).

A single bridge works well on its own. However, when there are many of them, it is useful to have a centralized controller that manages all of them in an orchestrated way.

Why create an SDN controller?

Networking on Xen is quite easy and scalable, yet one feature was missing: creating a private network amongst all hosts of a pool.

It was possible to create a private network on a single host for all of its VMs, but not across several hosts. Because such a network lives on only one host, it can't follow VMs migrating to or from other hosts. Also, if the host goes down, so does the network.

Hence the need for a tool that lets private networks scale beyond a single host. That tool is an SDN controller.

After evaluating existing solutions (OpenDaylight, Project Floodlight, etc.), we decided to create our own SDN controller so that it would be better integrated into our XOA solution.

Existing open source controllers are too complex for our needs, so we preferred to develop our own small and simple solution.

Citrix offers a similar solution for Citrix Hypervisor (XenServer): the Distributed Virtual Switch Controller (DVSC) appliance. However, this solution has some issues that led us to build our own:

- it is closed source

- it seems to be usable only in Internet Explorer

- it is an appliance, therefore you're required to install it as a VM

- it uses an active SSL connection to the OVSDB server of the virtual switches. This means the OVSDB server can be connected to only one controller, since it is bound to a single IP

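- it uses the `--bootstrap-ca-cert` option of the OVSDB server to provide its SSL certificate. With this option, the OVSDB server obtains the CA certificate from its peer on the first SSL connection, which makes it vulnerable to man-in-the-middle (MitM) attacks

- from our testing experience, it is not very robust against restarting or stopping the pool's hosts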

For all of these reasons, implementing our own solution seemed to be the best option. The solution we're working on solves all the problems above:

- it is open source

- it is integrated into XO, so it is usable from any web browser and doesn't require you to install a new VM

- it uses a passive SSL connection on the standard IANA OVSDB port (6640), so several SDN controllers can share control of a pool as long as their certificates are signed by the same CA certificate

- the SSL CA certificate is installed on the hosts through XAPI (`pool.certificate_install`, `pool.certificate_sync`). This means that if the XAPI connection uses HTTPS, a MitM attack won't work

- as for robustness against host restart/stop, we tested it thoroughly and it seems to work well. Once the beta is available, we will rely on feedback from our community to improve it if necessary
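With a passive connection, each host's `ovsdb-server` listens (an OVSDB remote of the form `pssl:6640`) and every controller acts as a TLS client. A host can accept several such clients at once. Below is a minimal Python sketch of the controller side. It is not XO's actual code. The PEM file paths are hypothetical placeholders. Disabling hostname checking is an assumption: host certificates may not be issued for the host's DNS name, so trust rests only on the shared CA's signature.

```python
import socket
import ssl

# IANA-registered OVSDB port; each host's ovsdb-server listens on it passively.
OVSDB_PORT = 6640


def make_controller_context(ca_file=None, cert_file=None, key_file=None):
    """Build a TLS client context for a controller talking to a passive OVSDB listener.

    All file paths are hypothetical placeholders for PEM files.
    """
    # PROTOCOL_TLS_CLIENT requires a valid peer certificate by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # Assumption: host certificates are not issued for the host's DNS name,
    # so trust is based only on the signature of the shared CA.
    ctx.check_hostname = False
    if ca_file is not None:
        # CA that signed the hosts' certificates (the same CA for every controller).
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file is not None:
        # The controller's own certificate, presented to ovsdb-server.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


def connect_to_host(address, ctx, timeout=5.0):
    """Open a TLS connection to a host's passive OVSDB listener."""
    raw = socket.create_connection((address, OVSDB_PORT), timeout=timeout)
    return ctx.wrap_socket(raw, server_hostname=address)
```

Because the host is the listening side, a second controller holding a certificate signed by the same CA could run the same code against the same host.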

How does it work?

First, let's define what we mean by a private network. We want a network that is:

- reachable by all the hosts in a pool

- unreachable by anything outside the network

- reactive when the pool changes (new host, host ejected, PIF unplugged, etc.)

Creation of the network

Now let's see how the controller creates the network.

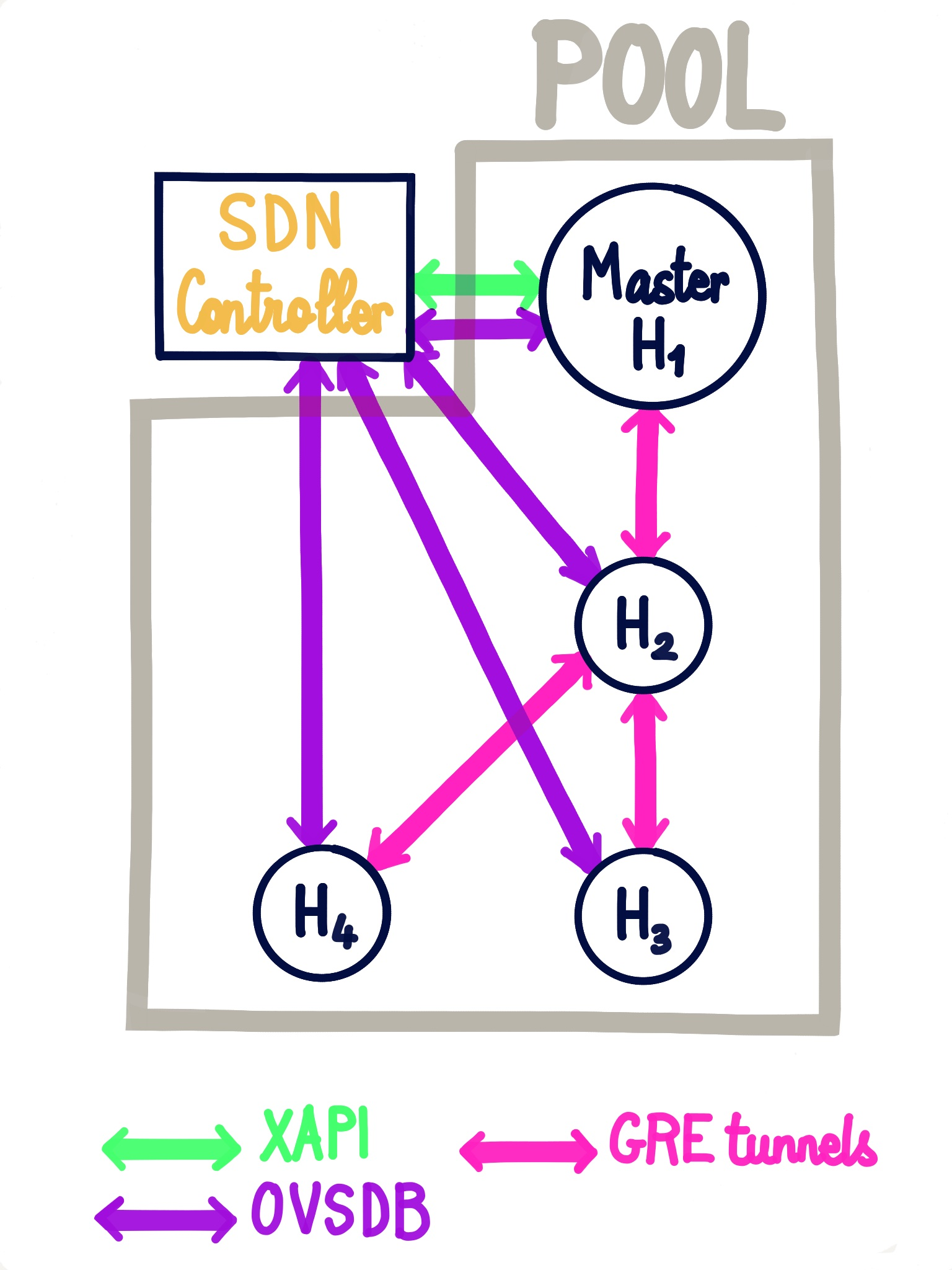

Let's take a pool and its hosts. To create the network and the associated PIFs, the controller needs an XAPI connection to the pool's master. To create the Open vSwitch (OVS) bridges, ports and interfaces, it also needs an OVSDB connection to each host.

Once all the connections have been created, the fun begins. The controller creates a tunnel on each host: a tunnel is a "fake" (non-physical) PIF built on top of a physical PIF. These tunnels will be the PIFs of the network.
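The per-host tunnel step can be sketched against XAPI's `tunnel.create(transport_PIF, network)` call, here through the Python `XenAPI` bindings (`session.xenapi`) rather than XO's own client. The helper name `create_pool_tunnels` is hypothetical. The post doesn't say how the physical transport PIF is chosen on each host, so in this sketch the caller supplies that mapping.

```python
def create_pool_tunnels(xapi, network_ref, transport_pifs):
    """Create one tunnel per host on `network_ref` (hypothetical helper).

    xapi: an object exposing the XAPI classes, e.g. `session.xenapi`
          from the XenAPI Python bindings.
    transport_pifs: mapping host ref -> physical PIF ref that will carry
          the tunnel (how it is chosen is left to the caller).
    Returns a mapping host ref -> tunnel ref.
    """
    tunnels = {}
    for host_ref, pif_ref in transport_pifs.items():
        # XAPI creates the tunnel and its access PIF on the given network.
        tunnels[host_ref] = xapi.tunnel.create(pif_ref, network_ref)
    return tunnels
```

Taking `xapi` as a parameter also makes the function easy to exercise offline with a stand-in object that records the `tunnel.create` calls.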

Next, we need to connect all the hosts' bridges together. We chose a star topology instead of a mesh to avoid loops in the network: a mesh would require the Spanning Tree Protocol, which would complicate the solution. To create the topology, a star-center is elected and every other host is connected to it via OVSDB. On each of those hosts, a port and an interface tunnelling (over either GRE or VXLAN) to the star-center are added to the bridge corresponding to the private network.
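On the OVSDB side, adding that port and interface can be expressed as a single `transact` request in the JSON-RPC protocol defined by RFC 7047. The minimal sketch below builds such a request. The bridge name, port name and remote IP are caller-chosen, hypothetical values. Only the `remote_ip` option is set on the tunnel interface; a real controller may set other options.

```python
import json


def add_tunnel_port_request(bridge, port_name, remote_ip, tunnel_type="gre", request_id=0):
    """Build an OVSDB `transact` request (RFC 7047) adding a tunnel port to a bridge.

    tunnel_type is "gre" or "vxlan"; all names passed in are caller-chosen.
    """
    if tunnel_type not in ("gre", "vxlan"):
        raise ValueError("unsupported tunnel type: " + tunnel_type)
    operations = [
        # 1. New Interface row of the tunnel type, pointing at the star-center.
        {
            "op": "insert",
            "table": "Interface",
            "row": {
                "name": port_name,
                "type": tunnel_type,
                "options": ["map", [["remote_ip", remote_ip]]],
            },
            "uuid-name": "new_iface",
        },
        # 2. New Port row that owns the interface.
        {
            "op": "insert",
            "table": "Port",
            "row": {
                "name": port_name,
                "interfaces": ["set", [["named-uuid", "new_iface"]]],
            },
            "uuid-name": "new_port",
        },
        # 3. Attach the port to the private network's bridge.
        {
            "op": "mutate",
            "table": "Bridge",
            "where": [["name", "==", bridge]],
            "mutations": [["ports", "insert", ["set", [["named-uuid", "new_port"]]]]],
        },
    ]
    request = {"method": "transact", "params": ["Open_vSwitch", *operations], "id": request_id}
    return json.dumps(request)
```

All three operations go in one transaction. This matters because OVSDB keeps only rows reachable from the root table, so an interface or port inserted without being linked to a bridge would not survive.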

Here's an example with a pool of 4 hosts connected via GRE tunnels: H1 (master), H2 (star-center), H3 and H4.
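The star topology reduces to a simple plan: every host other than the star-center gets exactly one tunnel to it. A pool of n hosts therefore needs n - 1 links and cannot contain a loop. The post doesn't say how the star-center is elected. The sketch below uses a hypothetical rule purely for illustration: keep a preferred candidate if it is connected, otherwise take the first connected host in sorted order. It reproduces the 4-host example above.

```python
def elect_star_center(connected_hosts, preferred=None):
    """Hypothetical election rule: keep `preferred` if still connected,
    otherwise pick the first connected host in sorted order."""
    if not connected_hosts:
        return None
    if preferred in connected_hosts:
        return preferred
    return sorted(connected_hosts)[0]


def star_links(connected_hosts, center):
    """Return the (host, center) tunnels forming a star around `center`."""
    return [(host, center) for host in sorted(connected_hosts) if host != center]


# The 4-host example: H2 is the star-center.
hosts = {"H1", "H2", "H3", "H4"}
center = elect_star_center(hosts, preferred="H2")
print(center)                     # H2
print(star_links(hosts, center))  # [('H1', 'H2'), ('H3', 'H2'), ('H4', 'H2')]
```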

Maintaining the network

Once the star topology is created, we need to keep it in sync with the pool's events.

When a PIF is unplugged or a host is removed or stopped, OVS removes the ports and interfaces automatically. The same goes for all VM events (additions, removals, migrations, VIF connections and disconnections): they are all handled by OVS.

The cases the controller has to handle itself:

- a new host is added to the pool: the controller creates a tunnel on the new host and adds the corresponding ports and interfaces to connect it to the star topology.

- a stopped host is started: if the host has a tunnel for the network, the tunnel is plugged and the corresponding ports and interfaces are added.

- a tunnel PIF is plugged: the corresponding ports and interfaces are added.

- the star-center (or its PIF) is removed or disconnected: as said before, ports and interfaces are removed automatically. However, the star-center is the backbone of the network, so we need to elect a new star-center and recreate the star topology from scratch with all the remaining hosts.
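These cases map onto a small event handler. The class and method names in the sketch below are hypothetical. It tracks which hosts are connected and computes only the links to (re)create; the actual OVSDB and XAPI calls are left out. The election rule (first connected host in sorted order) is again an assumption. Losing a host that is not the star-center needs no action, because OVS cleans up the ports and interfaces itself.

```python
class StarController:
    """Hypothetical model of the star-topology bookkeeping for one private network."""

    def __init__(self, hosts):
        self.connected = set(hosts)
        self.center = self._elect()

    def _elect(self):
        # Assumed election rule: first connected host in sorted order.
        return min(self.connected) if self.connected else None

    def _links_for(self, host):
        # A non-center host needs exactly one tunnel, to the star-center.
        if self.center is None or host == self.center:
            return []
        return [(host, self.center)]

    def on_host_added(self, host):
        """Connect a (re)joining host to the current star-center."""
        self.connected.add(host)
        if self.center is None:
            self.center = host
            return []
        return self._links_for(host)

    # In this sketch, a started host or a plugged tunnel PIF is handled the same way.
    on_host_started = on_host_added
    on_tunnel_plugged = on_host_added

    def on_host_lost(self, host):
        """Host stopped or removed, or its tunnel PIF unplugged."""
        self.connected.discard(host)
        if host != self.center:
            # OVS already removed the ports and interfaces: nothing to do.
            return []
        # The backbone is gone: elect a new center and rebuild the whole star.
        self.center = self._elect()
        return [link for h in sorted(self.connected) for link in self._links_for(h)]
```

For example, with hosts H1 to H4 and H1 as the star-center, losing H1 makes H2 the new center. The handler then returns a fresh link from each of H3 and H4 to H2.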

What about the future?

- The private networks created by the SDN controller are isolated; their traffic could also be encrypted with IPsec.