XenServer Hyperconvergence

Have you heard about hyperconvergence? Let's (re)discover it and explore what's possible with XenServer and Xen Orchestra.

EDIT: Discover XOSAN, our software-defined solution for XenServer hyperconvergence.

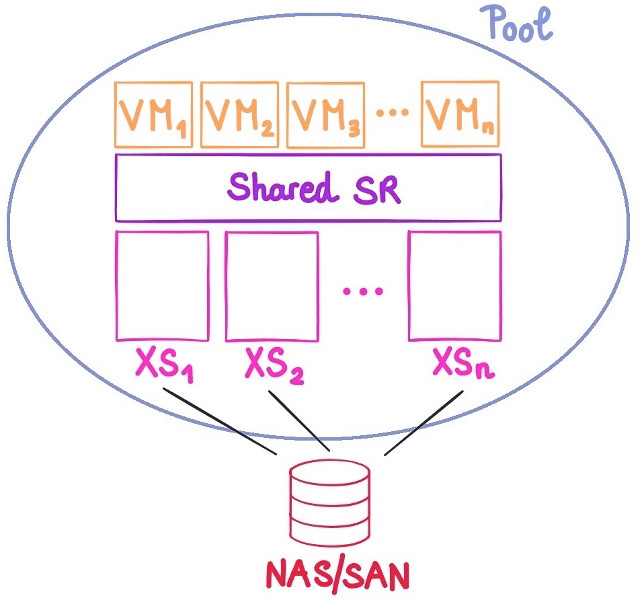

Current XenServer storage paradigm

In a traditional XenServer virtualization scenario, you have two choices for storing your VM disks: local storage (local HDD/SSD) or shared storage (NAS/SAN).

Local storage is simple, cheap and fast, but it's not really appropriate in a pool of hosts:

- Each time you need to migrate, it can take ages to transfer the disk content to another host.

- If your host disk is destroyed, you'll lose everything on it.

- No HA is possible at the hypervisor level.

What about shared storage? It's great for migrating VMs fast (only the RAM is transferred), it's compatible with XenServer HA and you have one central point to connect to. But on the other hand:

- it's a dedicated piece of equipment (cost, maintenance etc.)

- it doesn't scale directly (it doesn't grow with your XenServer hosts)

- it's a bit tricky when you have maintenance on your NAS/SAN

The situation can be summed up like this:

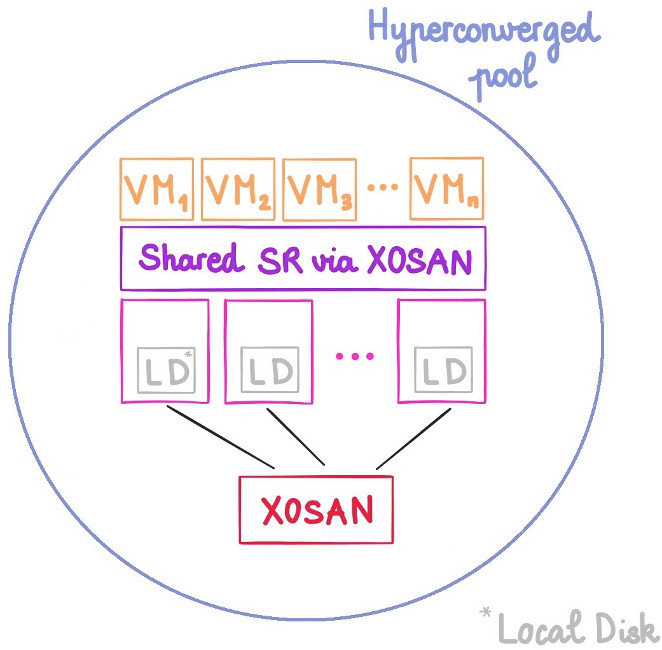

Hyperconvergence

The goal of hyperconvergence is to merge the best of both worlds (local and shared storage). Each host becomes part of a global storage, in addition to its traditional compute role.

What does it look like?

XenServer Hyperconverged

So you want to achieve that with XenServer? Good news, we've started working on XOSAN.

The goal of XOSAN is to provide a simple way to turn all the local storage of your XenServer hosts into shared storage within the pool, with the help of Xen Orchestra :)

It will bring you:

- Data security (content replicated on multiple hosts)

- Fast VM migration (just the RAM) between hosts because of the "shared storage"

- Data scalability (size, performance and security)

- High availability (if one host is down, data is still accessible from the others)

- Compatibility with XenServer HA on this shared storage, to automatically restart VMs that were on the faulty host.

Initial tests

Tests were done with the smallest possible setup (2 hosts), on about the cheapest commodity hardware you can find:

- 2x XS7 hosts, installed directly on 1x Samsung EVO 750 (128 GiB) each

- dedicated 1Gbit link between those 2 machines (one Intel card, the other is a cheap Realtek)

- data replication on both nodes

As you can see, cheap SSDs and a 1Gbit network only. This lets us compare performance against a NAS.

The "competing" NAS is a Debian box with ZFS RAID10 (6x500GiB HDDs) and 16GiB of RAM.

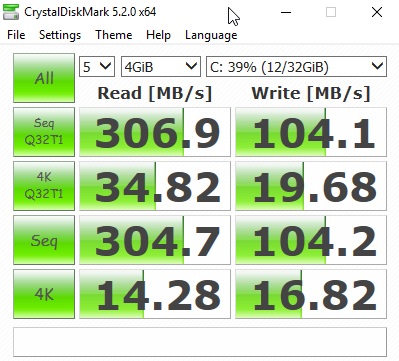

Let's run a quick benchmark in a VM created on this XOSAN storage (Windows Server 2016 + CrystalDiskMark on 4GiB):

| | ZFS NAS | XOSAN | diff |
|---|---|---|---|
| Sequential reads | 122 MB/s | 307 MB/s | +150% |
| 4K reads | 14 MB/s | 35 MB/s | +150% |
| Sequential writes | 106 MB/s | 104 MB/s | draw |
| 4K writes | 8 MB/s | 20 MB/s | +150% |

Not bad: 2.5x faster for sequential reads, 4K reads and 4K writes, with sequential writes being a draw. Fast writes sound logical on an SSD, but remember they are replicated on both hosts!
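To double-check the "diff" column, here is a minimal Python sketch that recomputes the ratios from the figures in the table. The `results` dictionary and the `speedup` helper are names made up for this illustration, not part of any XOSAN or Xen Orchestra tooling.

```python
# Throughput in MB/s as (ZFS NAS, XOSAN), copied from the benchmark table.
results = {
    "Sequential reads": (122, 307),
    "4K reads": (14, 35),
    "Sequential writes": (106, 104),
    "4K writes": (8, 20),
}


def speedup(nas_mbps, xosan_mbps):
    """Return XOSAN throughput as a multiple of the NAS throughput."""
    return xosan_mbps / nas_mbps


for name, (nas, xosan) in results.items():
    ratio = speedup(nas, xosan)
    # (ratio - 1) * 100 is the relative difference in percent.
    print(f"{name}: {ratio:.2f}x ({(ratio - 1) * 100:+.0f}%)")
```

The three "+150%" rows come out at roughly 2.5x, and sequential writes land within a couple of percent of each other, which is why that row is called a draw.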

How is it possible to read faster than 1Gbit on a Gbit network? That's because the content is on both hosts, so the VM running the benchmark can read the data "locally" without being slowed down by the network. Pretty cool eh?
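The arithmetic behind that remark can be sketched in a few lines of Python. The helper name `link_ceiling_mb_per_s` is hypothetical, and the calculation ignores protocol overhead, so the real ceiling of the link is somewhat lower than what is computed here.

```python
def link_ceiling_mb_per_s(gbit_per_s):
    """Theoretical ceiling of a network link in MB/s (8 bits per byte, no overhead)."""
    return gbit_per_s * 1000 / 8


ceiling = link_ceiling_mb_per_s(1)   # 1 Gbit/s link between the two hosts
measured_read = 307                  # MB/s, XOSAN sequential reads from the table

# True here: the measured reads cannot all have crossed the 1 Gbit link.
exceeds_link = measured_read > ceiling
print(f"link ceiling: {ceiling} MB/s, measured reads: {measured_read} MB/s")
```

A 1Gbit link tops out around 125 MB/s, so 307 MB/s can only be reached if most reads are served from the local copy. The sequential write figures (104 and 106 MB/s) sit just under that ceiling, which is plausibly the network acting as the bottleneck for replicated writes.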

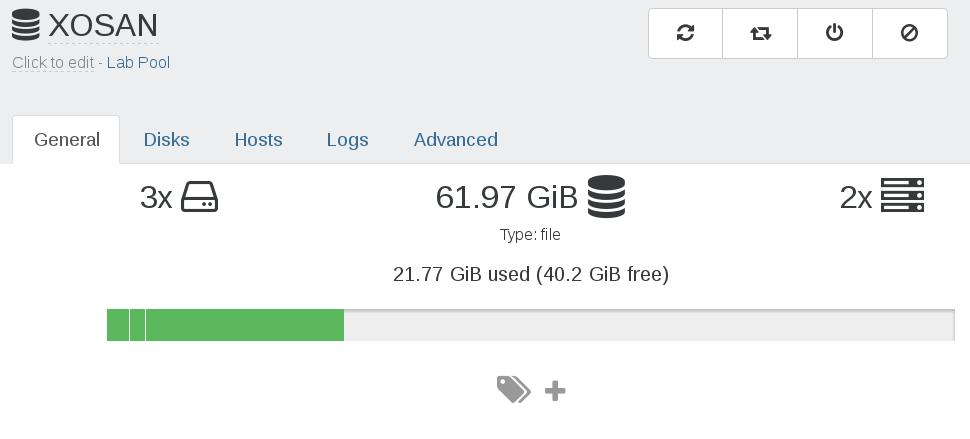

Thin provisioned

Also, because it uses a file backend, this "shared SR" is thin provisioned.

More than 2 nodes

This is the smallest possible scenario, but the next tests will run on larger installations. They will use not just replication but also striping of data across multiple disks/hosts (like a giant RAID, sort of).
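To get a feel for the trade-off between replication and striping, here is a deliberately simplified Python model of usable capacity. It assumes equal-size disks and ignores metadata overhead. The function names are invented for this sketch and do not describe how XOSAN actually lays out data.

```python
def replicated_capacity(disk_sizes_gib, replicas):
    """Usable space when every block is stored `replicas` times (equal-size disks assumed)."""
    return sum(disk_sizes_gib) / replicas


def striped_capacity(disk_sizes_gib):
    """Usable space when data is only striped, with no redundancy at all."""
    return sum(disk_sizes_gib)


two_hosts = [128, 128]             # the test setup: one 128 GiB SSD per host
four_hosts = [128, 128, 128, 128]  # a hypothetical larger pool

print(replicated_capacity(two_hosts, replicas=2))   # full copy on each host
print(replicated_capacity(four_hosts, replicas=2))  # striping plus 2 copies
print(striped_capacity(four_hosts))                 # striping only, no copies
```

In this model, the two-host replicated setup offers the capacity of a single disk, while adding hosts and combining striping with replication grows usable space without giving up redundancy.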

Closed beta planned

We'll create a closed beta for XOA users early in 2017, for people who want to try it on a non-production setup.

This way, we'll be able to validate the concept at a larger scale and streamline the process until we reach a full-featured product.

Future of XenServer and hyperconvergence

The current tested setup is a first step to validate the concept. It's far from being automated yet, and some pieces will require a bit of work to stay easy to deploy.

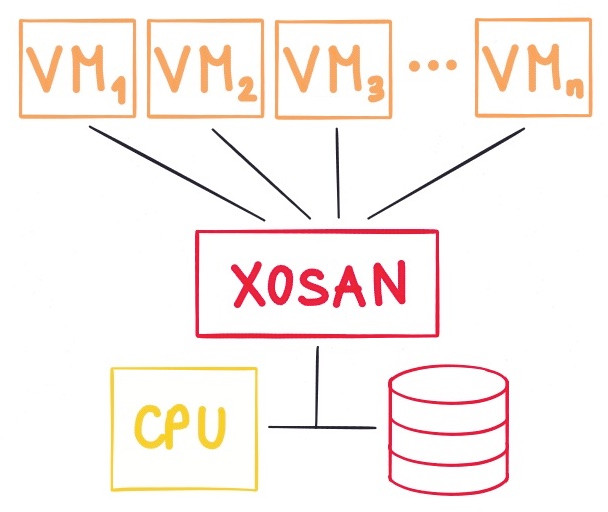

But the fantastic work of the XenServer and XAPI teams on the future modular storage stack will be a very good base for pushing XOSAN to another level.

SMAPIv3 diagram

Stay tuned for the Closed Beta program :)