Next-gen XO backup engine: beyond VHD

Discover how we’re building a smarter, format-agnostic backup system to meet the demands of tomorrow’s infrastructure.

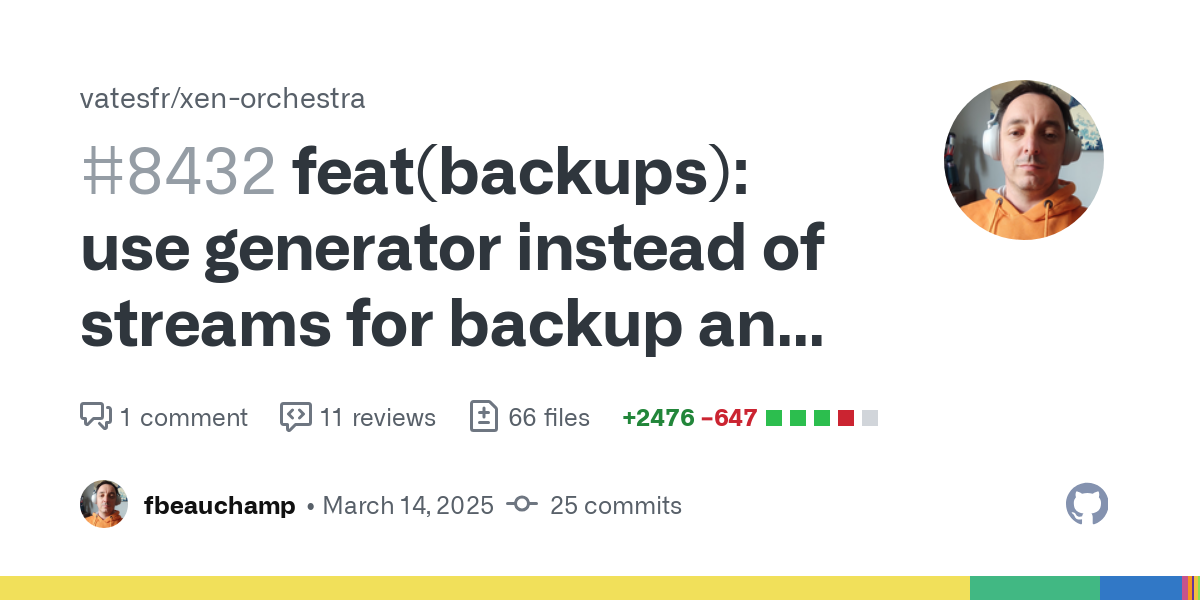

If you've been keeping an eye on our community forum, you may have spotted a recent thread inviting users to test a brand new backup engine in Xen Orchestra.

That’s right: we’ve been working behind the scenes on a major revamp of how XO handles backups, and it’s now far enough along that we can share more details.

This is more than just a refactor. It’s a foundational redesign that opens the door to bigger, faster, and more flexible backup workflows, and it's built to support the future of virtualization at scale.

Why change the backup engine at all?

Today, when Xen Orchestra performs a backup (whether from XAPI or via a VMware import), it transforms the data into a VHD stream. This approach has served us well, offering solid performance and flexibility. But it comes with one critical limitation: VHD streams simply can’t handle virtual disks larger than 2TB.

This isn’t a bug: it’s a design constraint of the format. Each offset in the VHD allocation table is a 32-bit value pointing to 512-byte sectors. Do the math: 2^32 sectors × 512 bytes comes to 2TB (2 TiB, strictly speaking), and that is the ceiling.
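For readers who want to check the arithmetic, here is a minimal sketch. The constant and function names are ours, chosen for illustration; only the 32-bit offset and the 512-byte sector size come from the description above.

```typescript
// Values taken from the paragraph above: 32-bit offsets, 512-byte sectors.
const SECTOR_SIZE = 512;
const OFFSET_BITS = 32;

// Illustrative helper (not part of any XO API): the largest byte range that
// an offset of `offsetBits` bits, counted in sectors, can address.
function maxAddressableBytes(offsetBits: number, sectorSize: number): number {
  return 2 ** offsetBits * sectorSize;
}

const limitBytes = maxAddressableBytes(OFFSET_BITS, SECTOR_SIZE);
const limitTiB = limitBytes / 2 ** 40;

console.log(`${limitBytes} bytes = ${limitTiB} TiB`);
// prints "2199023255552 bytes = 2 TiB"
```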

As more users run larger and more complex environments, this limitation becomes a blocker, not just for backups, but for restores, V2V migrations, and imports. On top of that, our current implementation has grown increasingly complex. We’re juggling multiple disk formats (various VMDKs, VHDs, and now QCOWs) with different logic paths for backups, restores, V2V, browser uploads, and more.

Over time, this has led to duplicated code, inconsistencies in block size handling (2MB for VHD, 64KB for CBT, and everything from 512B to 64KB for VMDKs), and an increasingly tangled web of logic. Adding support for QCOW disks would mean wiring yet another format into all those separate flows, in an untyped codebase with hundreds of thousands of lines. Not ideal.
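To make the block-size mismatch concrete, here is a small sketch of the kind of conversion that ends up repeated in each flow: mapping changed 64KB regions onto the 2MB blocks that contain them. The helper name and the direction of the conversion are assumptions for illustration, not code from Xen Orchestra.

```typescript
const KiB = 1024;
const CBT_GRANULARITY = 64 * KiB;       // 64KB, the CBT figure quoted above
const VHD_BLOCK_SIZE = 2 * 1024 * KiB;  // 2MB, the VHD figure quoted above

// Hypothetical helper: given the indexes of changed 64KB regions, return the
// sorted, de-duplicated indexes of the 2MB blocks that contain them.
function toVhdBlockIndexes(changedRegions: number[]): number[] {
  const regionsPerBlock = VHD_BLOCK_SIZE / CBT_GRANULARITY; // 32
  const blocks = new Set<number>();
  for (const region of changedRegions) {
    blocks.add(Math.floor(region / regionsPerBlock));
  }
  return Array.from(blocks).sort((a, b) => a - b);
}

// Regions 0 and 31 share block 0, region 32 starts block 1, region 100 is in block 3.
console.log(toVhdBlockIndexes([0, 31, 32, 100])); // [0, 1, 3]
```

Every extra format with its own granularity needs another variant of this arithmetic, which is where the duplication comes from.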

So, we stepped back and decided to rethink the architecture from the ground up.

How we’re doing it

The new engine is being built with clarity and modularity in mind. We’ve introduced base classes to represent disks in a consistent way, regardless of the source or format. These are written in TypeScript, unit tested, and designed to be composable — a big step forward in maintainability.
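As a rough idea of what a format-independent disk abstraction can look like, here is a simplified sketch. Every name in it (`DiskBlock`, `Disk`, `InMemoryDisk` and their methods) is hypothetical and chosen for this post; the real classes in the XO codebase may be shaped quite differently.

```typescript
// Hypothetical names throughout; this is not the actual XO class hierarchy.
interface DiskBlock {
  index: number;
  data: Uint8Array;
}

abstract class Disk {
  abstract getVirtualSize(): number;
  abstract getBlockSize(): number;
  abstract getBlockIndexes(): number[];
  abstract readBlock(index: number): DiskBlock;

  // Shared logic lives once in the base class, whatever the source format.
  *blocks(): Generator<DiskBlock> {
    for (const index of this.getBlockIndexes()) {
      yield this.readBlock(index);
    }
  }
}

// A toy implementation backed by a Map, standing in for a real format reader.
class InMemoryDisk extends Disk {
  private readonly blockSize: number;
  private readonly virtualSize: number;
  private readonly store: Map<number, Uint8Array>;

  constructor(blockSize: number, virtualSize: number, store: Map<number, Uint8Array>) {
    super();
    this.blockSize = blockSize;
    this.virtualSize = virtualSize;
    this.store = store;
  }

  getVirtualSize(): number { return this.virtualSize; }
  getBlockSize(): number { return this.blockSize; }
  getBlockIndexes(): number[] {
    return Array.from(this.store.keys()).sort((a, b) => a - b);
  }
  readBlock(index: number): DiskBlock {
    const data = this.store.get(index);
    if (data === undefined) {
      throw new Error(`block ${index} is not allocated`);
    }
    return { index, data };
  }
}

const disk = new InMemoryDisk(4, 16, new Map<number, Uint8Array>([
  [2, new Uint8Array([9, 9, 9, 9])],
  [0, new Uint8Array([1, 2, 3, 4])],
]));

const seen: number[] = [];
for (const block of disk.blocks()) {
  seen.push(block.index);
}
console.log(seen); // [0, 2]
```

The point of the design is that a consumer only depends on `Disk`, so a new format means one new subclass instead of changes in every flow.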

We also moved a lot of low-level logic, like CBT and NBD handling, into their own dedicated classes. That makes the whole system easier to understand, test, and extend.

Instead of relying on Node.js streams, we’ve adopted generators to process data in a linear, step-by-step fashion. This makes the code much easier to follow, and more importantly, helps us handle errors exactly where they happen — a key improvement for reliability.
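Here is a minimal sketch of that generator style, using made-up stage names (`readBlocks`, `checkSize`, `writeAll`) rather than anything from the real engine. It uses synchronous generators to stay short; the same shape works for asynchronous I/O with `async function*` and `for await`.

```typescript
// Hypothetical types and stages, for illustration only.
type Block = { index: number; data: Uint8Array };

// Source stage: yields blocks one at a time, only when the consumer asks.
function* readBlocks(count: number, blockSize: number): Generator<Block> {
  for (let index = 0; index < count; index++) {
    yield { index, data: new Uint8Array(blockSize).fill(index) };
  }
}

// Transform stage: validates each block as it passes through.
function* checkSize(source: Iterable<Block>, blockSize: number): Generator<Block> {
  for (const block of source) {
    if (block.data.length !== blockSize) {
      // The error is raised in the exact step that detected the problem.
      throw new Error(
        `block ${block.index}: expected ${blockSize} bytes, got ${block.data.length}`
      );
    }
    yield block;
  }
}

// Sink stage: because the consumer pulls the data, an ordinary try/catch
// around the loop sees failures from any upstream stage.
function writeAll(source: Iterable<Block>): number {
  let written = 0;
  try {
    for (const block of source) {
      written += block.data.length;
    }
  } catch (error) {
    throw new Error(`backup failed after ${written} bytes: ${(error as Error).message}`);
  }
  return written;
}

const total = writeAll(checkSize(readBlocks(3, 4), 4));
console.log(total); // 12
```

Compared with a stream pipeline, there are no separate error events to wire up on each stage: an exception propagates through the chain of loops like any other.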

Right now, the new engine can read from a variety of sources (XAPI streams, NBD+CBT, backup repositories) and write either individual blocks or full VHD streams. It’s already a big leap forward, and you can explore the progress in the related pull requests.

What to expect and what to watch for

Even with a wide test campaign and help from our amazing community, we know that we haven’t yet tested every combination or edge case. The most visible issues would be backup job errors. But more subtle bugs, like slight shifts in binary stream parsing, could break backups without being immediately obvious.

There’s also the risk of mishandling disk chains — restoring the wrong data if the chain isn’t rebuilt correctly. That’s why it’s more important than ever to run health checks and test your restores regularly.
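To show why chain order matters, here is a deliberately simplified model. It assumes a chain is a list of layers ordered from the full backup to the latest delta, and that each layer holds only the blocks it changed. The `Layer` type and `resolveBlock` function are illustrative names, not the engine's own.

```typescript
// Simplified, hypothetical model of a backup chain.
// Block contents are strings here purely for readability.
type Layer = Map<number, string>;

// Returns the newest version of a block, walking from the latest layer
// back to the oldest. `chain` must be ordered oldest first.
function resolveBlock(chain: Layer[], index: number): string | undefined {
  for (const layer of chain.slice().reverse()) {
    const data = layer.get(index);
    if (data !== undefined) {
      return data;
    }
  }
  return undefined;
}

const full: Layer = new Map([[0, "A0"], [1, "B0"]]);
const delta1: Layer = new Map([[1, "B1"]]);
const delta2: Layer = new Map([[0, "A2"]]);

console.log(resolveBlock([full, delta1, delta2], 0)); // A2
console.log(resolveBlock([full, delta1, delta2], 1)); // B1

// Same layers, wrong order: the stale block from the full backup wins.
console.log(resolveBlock([delta2, delta1, full], 1)); // B0
```

Nothing fails in the last call; it simply returns old data, which is why only a restore test or health check would catch this kind of bug.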

If you do encounter any issues, please report them with as much detail as you can. It helps us tremendously.

What’s next?

As soon as XAPI exposes the needed endpoints for QCOW export/import (very soon!), we’ll unlock one of the biggest benefits of this new architecture: incremental backup and replication support for disks over 2TB.

Beyond that, we’re planning to rewrite other parts of the system — like V2V, disk import, and VM import — to reuse this new code path. That will make our handling of large and diverse disks much simpler and more robust.

And this is just the beginning. These changes set the stage for closing the gap with the biggest names in the backup space — with a clean, powerful, open-source engine at the core.

Stay tuned

We’re really excited about what this unlocks, and we’ll let you know as soon as it lands in a stable release. Until then, you can keep an eye on the GitHub progress or join the discussion on our community forum.

Thanks for your continued support, and for helping us build the future of open-source backup!